Saturday, September 30, 2023

Delhi Cops Break Up Child's Birthday Party After Conversion Bid Complaint

Delhi Minister Gopal Rai Orders Probe Into Azadpur Mandi Fire

Centre's Action Plan To Curb Delhi Air Pollution Comes Into Force

Friday, September 29, 2023

One Man Planned, Executed Rs 25-Crore Delhi Heist; Another Thief Did Him In

Minor Girl, Boy Jump In Front Of Moving Train In Uttar Pradesh

Delhi Rs 25 Crore Heist: 3 Accused Arrested, Some Gold Recovered

Thursday, September 28, 2023

Pregnant Woman, 21, Taken To Forest And Set On Fire By Brother, Mother

Google Search Generative Experience adds About this result feature

Google today is adding its About this result feature to the AI-generated answers in the Search Generative Experience (SGE).

What it does. About this result is meant to “give people helpful context, such as a description of how SGE generated the response, so they can understand more about the underlying technology,” according to Google.

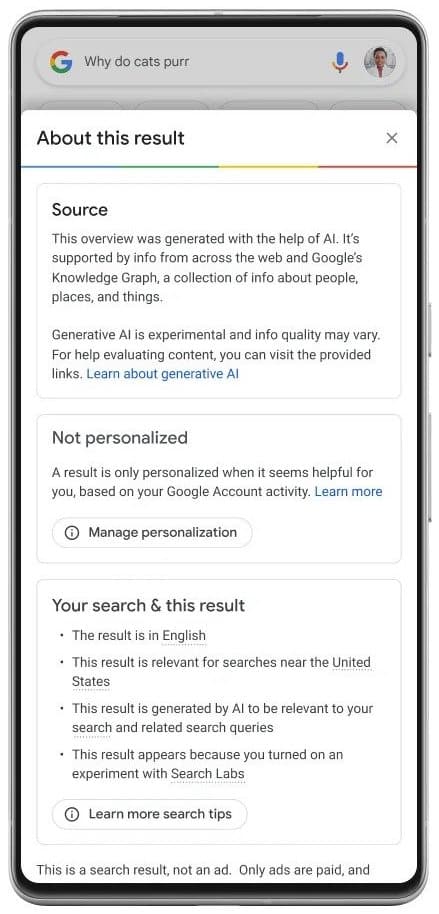

What it looks like. Here’s a GIF showing how this feature works:

In this example, the description says:

- “This overview was generated with the help of AI. It’s supported by info from across the web and Google’s Knowledge Graph, a collection of info about people, places and things. Generative AI is experimental and info quality may vary. For help evaluating content, you can visit the provided links.”

For individual links (soon). About this result will “soon” be added to individual links in Google SGE as well. Here, the purpose will be to give people more information about the webpages that back up the SGE answers.

About About this result. Google launched About this result in Search in 2021. It was meant to help searchers learn more about a search result or search feature.

Why we care. Google has struggled to cite its sources in SGE and Bard, so highlighting the webpages and content behind its AI answers seems like another positive step toward showing where those answers come from.

Targeted improvements in SGE. Google is also working to reduce AI-powered responses in SGE that validate “false or offensive premises.” A “false premise” query is one built on an inaccurate or factually incorrect assumption, something Google has also worked to reduce in featured snippets. According to Google:

“We’re rolling out an update to help train the AI model to better detect these types of false or offensive premise queries, and respond with higher-quality, more accurate responses. We’re also working on solutions to use large language models to critique their own first draft responses on sensitive topics, and then rewrite them based on quality and safety principles. While we’ve built a range of protections into SGE and these represent meaningful improvements, there are known limitations to this technology, and we’ll continue to make progress.”

– Hema Budaraju, Google senior director, product management, Search / How we’re responsibly expanding access to generative AI in Search

The post Google Search Generative Experience adds About this result feature appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/RQ03i2o


Ahead Of Diwali, Gurugram Bans Sale And Use Of Firecrackers From Nov 1

Stuck In Bengaluru Traffic For Hours, He Ordered Pizza And Got It On Time

Wednesday, September 27, 2023

Mumbai Traffic Police Issued Traffic Advisory For Ganpati Visarjan: Details

Fire Breaks Out At Godown In Mumbai's Kurla, 10 Fire Engines On Spot

Meta AI assistant uses Microsoft Bing Search results

Meta AI, announced today, is a new conversational assistant “you can interact with like a person,” according to the company.

Microsoft Bing is working with Meta to “integrate Bing into Meta AI’s chat experiences enabling more timely and up-to-date answers with access to real-time search information,” according to Yusuf Mehdi, corporate vice president and consumer chief marketing officer, in a company blog post.

This includes bringing Bing to Meta AI and Meta’s 28 other new AI characters in Instagram, WhatsApp and Messenger.

What Microsoft is saying. If a request requires fresh information, Meta AI will automatically ask Bing to get the chat answer, according to Jordi Ribas, Microsoft CVP, head of engineering and product for Bing.

- “Many people ask me whether LLMs will make web search engines less valuable, but the opposite is actually the case. LLMs make web search more critical than ever, since the combination of LLMs + web search is what produces fresher and more accurate chat results,” Ribas posted on X.

Why we care. Search continues to expand and is now essentially everything, everywhere, all at once. This integration means Meta’s AI assistant will have access to real-time information, which in theory should produce better chat results via conversational search. And coming soon: businesses will be able to create their own AIs.

What Meta AI looks like. Here’s a GIF Meta shared:

More Meta AIs with Bing. Meta announced it is releasing 28 additional AIs, all with unique personas, that users can interact with on its platforms. Meta said it plans to bring Bing search to these AI characters, many of which are based on celebrities (e.g., Tom Brady, Snoop Dogg, Paris Hilton, MrBeast) “in the coming months.”

Bing’s role. Meta AI is powered by a custom model that leverages technology from Llama 2 and other large language models. However, Meta’s LLMs are trained only on information from before 2023, which on its own would lead to dated responses. This is where Bing’s real-time web results come into play.

More to come. Meta promises that it is working on AIs for businesses and creators that will be available next year. From Meta:

- “Businesses will also be able to create AIs that reflect their brand’s values and improve customer service experiences. From small businesses looking to scale to large brands wanting to enhance communications, AIs can help businesses engage with their customers across our apps. We’re launching this in alpha and will scale it further next year.”

The post Meta AI assistant uses Microsoft Bing Search results appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/gk0ey4W


"Entry Was Friendly": Delhi Cops On Murder Scene Of 65-Year-Old Woman

ChatGPT Browse with Bing returns after being disabled 3 months ago

OpenAI has re-enabled the Bing search feature it added to ChatGPT after temporarily disabling it in early July. “ChatGPT can now browse the internet to provide you with current and authoritative information, complete with direct links to sources. It is no longer limited to data before September 2021,” wrote OpenAI on X.

Browse with Bing. OpenAI added Bing Search to ChatGPT as a premium service in May, giving ChatGPT access to more timely, up-to-date information powered by Bing Search. ChatGPT’s training data runs only through 2021, which is why Bing Chat provided a better experience for time-sensitive questions. With Bing Search in ChatGPT, the service can “provide timelier and more up-to-date answers with access from the web,” the company wrote.

The service was for ChatGPT Plus subscribers.

Returned on September 27. OpenAI explained, “Since the original launch of browsing in May, we received useful feedback. Updates include following robots.txt and identifying user agents so sites can control how ChatGPT interacts with them. Browsing is particularly useful for tasks that require up-to-date information, such as helping you with technical research, trying to choose a bike, or planning a vacation.”
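Because browsing now honors robots.txt and identifies itself by user agent, site owners can test how their rules would treat it. The sketch below uses Python’s standard-library `urllib.robotparser`. The user-agent token `ChatGPT-User` and the example rules are assumptions for illustration; check OpenAI’s current documentation for the exact token. Note that Python’s parser applies the first matching rule in a group, which is simpler than the longest-match rule in the Robots Exclusion Protocol standard.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks the assumed "ChatGPT-User" agent from
# /private/ while leaving the rest of the site open to every crawler.
ROBOTS_TXT = """\
User-agent: ChatGPT-User
Disallow: /private/

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines and marks the rules as loaded.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/blog/post", "/private/report"):
        url = "https://example.com" + path
        print(path, is_allowed(ROBOTS_TXT, "ChatGPT-User", url))
```

Crawlers that don’t match the `ChatGPT-User` group fall through to the `*` group and remain allowed everywhere.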

Who can use it. Browse with Bing is available to ChatGPT Plus and Enterprise users today. OpenAI said it will “expand to all users soon.”

To enable it, choose Browse with Bing in the selector under GPT-4.

Why we care. The Browse with Bing feature in ChatGPT made the service even more useful to searchers and people with questions. Now that this feature is back, it adds more recent information to ChatGPT.

To learn more about this, see our original story over here.

The post ChatGPT Browse with Bing returns after being disabled 3 months ago appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/g8jWRFr


Mobile Phone Charging In House Explodes, Shatters Windows Of Parked Cars

Woman Poses As Real Estate Agent In Navi Mumbai, Dupes Rs 1.87 Crore

Tuesday, September 26, 2023

Google Search at 25: SEO experts share memorable moments

How has it only been 25 years? Am I the only one who feels like there can’t have been a world without Google?!

The search and technology behemoth has undoubtedly affected human experience across the globe in one form or another, but none more so than that of the world’s SEOs.

The SEO industry effectively shares its history with Google.

Once search engines like Google started to become the primary method through which people accessed information online, it became crucial for businesses to have a digital presence and be listed on search engine results pages (SERPs).

Evolution of the algorithm: Changes in SEO focus

Thanks to ever-evolving algorithms and expectations, search is a relentless journey of adaptation and resilience for SEOs.

Each update feels like a new puzzle, urging the industry to delve deeper, understand better and refine strategies to ensure sites continue to shine organically.

It’s about trying to anticipate the subtleties of algorithm changes, predicting what will come next and constantly innovating to keep content relevant, user-friendly and, importantly, visible.

Every change is a new opportunity to learn. Here are just some of the many algorithm changes that SEO has (mostly) survived, as told by industry veterans themselves.

Penguin update

When we talk about Google shaking things up in the SEO world, the Penguin update of April 2012 is one for the books.

It was the kind of wave that reshaped how we all thought about links and rankings and made us rethink our game plans.

Mark Williams-Cook, co-owner of search agency Candour, has some pretty vivid memories about this time. “The April 2012 Penguin v1 Update… it made such profound and lasting changes in the industry,” he said.

Before Penguin rolled out, “the more, the merrier” was the mantra for links. It was all about piling them on, and aggressive link building was the way to go.

There were places where you could buy links, and services promising to boost your rankings actually worked! But then, Penguin happened. It was like a switch flipped overnight.

Williams-Cook saw it all – big names in affiliate marketing saw their traffic go down the drain, agencies were getting the boot from clients whose rankings tanked, and suddenly, folks were charging money to remove links. Major web players like Expedia didn’t escape the penalties either.

He shares his own brush with Penguin quite candidly. “I personally had a few of my money websites tank (deservedly), and it was a great lesson in ‘it works until it doesn’t’.”

It’s a reminder of how strategies that seem to work can suddenly backfire if they aren’t sustainable or authentic.

Medic update

Let’s hop back to August 2018, which is likely to be well remembered by many SEO enthusiasts with health-related sites – all thanks to the Medic update.

Brady Madden, founder at Evergreen Digital, had a CBD commerce website under his wing. He enjoyed the fruits of ranking well, with sales soaring over $100,000 monthly.

Then Medic hit, and it was like the rug was pulled out from under him, leading to a loss of around $50,000 in sales in just a month.

“Since this ‘Medic’ update was so new, we didn’t know where or what to address,” Madden said.

He and his team took about six months to revamp their strategies, reassess their content, strengthen their link building campaigns and make several other crucial adjustments to bounce back.

“At the time, I had been around SEO and digital marketing for 5+ years… This whole event really got me going with SEO and has become my top marketing channel to do work for since then,” Madden said, reflecting on the journey this update had led him on.

Medic was termed a “broad, global, core update” by Google, with a particular focus on health, medical, and Your Money or Your Life (YMYL) sites.

Google’s advice was straightforward yet frustrating for many – there’s no “fix,” just continue building great content and improving user experience.

The update was a steep learning curve that revealed how SEO rankings shift and how much core algorithm updates can affect them.

“I also learned that while they’re tedious to read, docs like Google’s Quality Raters Guidelines provide a wealth of knowledge for SEOs to use,” Madden said, highlighting the invaluable lessons to be learned from Google’s documentation.

Diversity update

Olivian-Claudiu Stoica, senior SEO specialist at Wave Studio, has been in the SEO game since 2015. He fondly remembers this transformative update.

“The Diversity update from 2019 was a nice touch from Google… This clearly helped smaller sites gain visibility,” Stoica said.

The Diversity update aimed for more equitable representation by generally limiting the same domain to no more than two listings per search query, bringing more balance to search results.

It was a shift toward a richer, more diverse search experience, offering users a wider array of perspectives and information sources.
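Google hasn’t published how its diversity filter works, so the following is only a simplified illustration of the “no more than two listings per domain” idea. It assumes a ranked list of URLs, keys on the exact hostname (a real system would likely group subdomains under one site), and keeps the first two results per host in ranking order.

```python
from __future__ import annotations

from urllib.parse import urlparse


def diversify(results: list[str], max_per_domain: int = 2) -> list[str]:
    """Keep ranked URLs in order, dropping any beyond max_per_domain per host."""
    counts: dict[str, int] = {}
    kept: list[str] = []
    for url in results:
        host = urlparse(url).hostname or ""
        if counts.get(host, 0) < max_per_domain:
            kept.append(url)
            counts[host] = counts.get(host, 0) + 1
    return kept


if __name__ == "__main__":
    ranked = [
        "https://a.com/1",
        "https://a.com/2",
        "https://a.com/3",  # third a.com result is filtered out
        "https://b.com/1",
    ]
    print(diversify(ranked))
```

The filter never reorders results; it only removes surplus listings, which is what lets a smaller site’s page move up into the freed slot.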

Stoica also sheds light on the updates that profoundly impact businesses today. He highlights the Helpful Content, Spam, and Medic updates as pivotal changes defining the contemporary SEO landscape.

“Holistic marketing, brand reputation, PR-led link building, well-researched content, customer experience, and proven expertise are now the norm to succeed in Google Search,” he said, outlining the multifaceted and often multi-disciplined approach required from modern SEOs.

Product reviews update, May 2022 core update and more

After Penguin, things didn’t get any smoother, and Ryan Jones, marketing manager at SEOTesting, has quite the tale about rolling with the punches of Google’s relentless period of updates.

“The site hovered between 1,000 and 1,500 clicks per day between November 2021 and December 2021,” he shares.

But then, it was like a series of rapid-fire updates came crashing down: December 2021 Product Reviews Update, May 2022 Core Update, and more.

And the impact? It was like watching the rollercoaster take a steep dive.

The traffic went from those nice highs to around 500 clicks per day.

“Between half and more than half of the traffic was gone,” Jones said.

But it wasn’t just about the numbers; it was about figuring out where the ship was leaking. Most of the traffic loss, it turned out, came from the blog.

“Google was no longer loving the blog and was not ranking it,” Jones said.

It was a wake-up call, leading to some tough decisions, like removing around 75 blog posts – a whopping 65% of the blog.

It wasn’t just about cutting the losses but about rebuilding and re-strategizing. Diving deep into content marketing, investing in quality writing, and focusing on truly competitive content became the new game plan.

“We also invested in ‘proper’ link building… which we believed helped also,” Jones added.

And the journey didn’t end there. The uncertainty and stress continued with the next series of updates, including the July 2022 Product Reviews Update and the October 2022 Spam Update.

“It took a while, but we achieved a 100% recovery from this update,” Jones said.

Link spam and helpful content updates

Jonathan Boshoff, SEO manager at Digital Sisco, recounts the turbulence of algorithm updates throwing curveballs one after another.

“In my previous role, I ran SEO for one of Canada’s largest online payday loan companies. We got hit by three algorithm updates in a row (2022 link spam and helpful content, and one in Feb.) This was the comedown from all-time highs. The company was thrilled to be making lots of money with SEO, but the algorithm updates destroyed all our rankings. Essentially, the whole company was scrambling. Everyone became an SEO all of a sudden.”

Boshoff’s story is a snapshot of the collective experiences of the SEO community.

The excitement of top rankings. The scramble when they plummet. And the continuous endeavor to decode and master the changing goalposts.

“Ultimately, another algorithm update rolled out and fixed everything. Since then they have continued on to have even better results than ever before. It was a very hectic couple of months!” he shares, underscoring the resilience and adaptability that define the spirit of SEO professionals.

E-A-T (and later E-E-A-T)

When Google was still in its infancy, the core mission was clear and simple: Create the best search experience possible.

In an era where links ruled the kingdom of page ranking, SEO enthusiasts explored every nook and cranny to master the game.

Enter E-A-T (Expertise, Authoritativeness and Trustworthiness), a concept that’s been in Google’s quality rater guidelines since 2014.

It has been a compass guiding the journey of search engine evolution, with the goal of delivering diverse, high-quality, and helpful search results to users.

Helene Jelenc, SEO consultant at Flow SEO, who was then exploring the realms of SEO with her travel blog, shares her journey and reflections on the inception of E-A-T.

“When EAT was added to the guidelines, that’s when things began to ‘click’ with SEO for me,” she said.

Jelenc, like many content creators, was striving to offer unique and original content and insights. Her determination to produce well-researched blog posts finally earned recognition and reward from Google.

E-A-T isn’t just about the visible and tangible elements like backlinks and anchor text; it’s about building genuine high-level E-A-T, a journey that requires time, effort and authenticity.

Google values quality, relevance and originality over quick fixes and instant gains. It’s about promoting the “very best” and ensuring that they get the spotlight they deserve.

It’s important to understand that E-A-T and the Quality Rater Guidelines are not technically part of Google’s algorithms.

However, they represent the aspirations of where Google wants the search algorithm to go.

They don’t reveal the intricacies of how the algorithm ranks results but illuminate its aims.

By adhering to E-A-T principles, content creators have a better chance of ranking well, and users get the right advice.

Jelenc’s insights and experiences mirror the broader evolution in the SEO world. In an era riddled with misinformation, especially in areas impacting Your Money or Your Life (YMYL) queries, the principles of E-A-T have become more important than ever.

“Our humanness and the experiences we have inform our content and add layers that make it unique,” she reflects.

Jelenc sees the advancements in E-A-T and the introduction of E-E-A-T (the added “E” being Experience) as steps toward acknowledging the value of human experiences in content creation.

With this, she’s optimistic about Google’s future direction: “[I]n light of AI-generated everything, Google dropped E-E-A-T, which, in my opinion, is the single most important update in years.”

Legacy SEO strategies and tactics: It works until it doesn’t

Exact match domains

A pivotal moment in Google Search’s history was the devaluation of exact match domains (EMDs). This move reshaped domain strategies and brought a renewed focus on content relevance and quality.

Daniel Foley, SEO evangelist and director at Assertive Media, shared a memorable encounter that perfectly encapsulates the significance of this shift.

“One of my most memorable moments in SEO was when Matt Cutts announced the devaluation of exact match domains,” he said.

The timing of this announcement was awkward for Foley. He had just secured a number one ranking on Google for an EMD he had purchased, using “free for all” reciprocal links and a page filled with nothing but Lorem Ipsum content!

When he shared this achievement with then-Googler Matt Cutts on Twitter, it didn’t earn applause. Instead, it highlighted the very loopholes the devaluation aimed to address.

“He wasn’t impressed,” Foley said, recounting how the interaction went “semi-viral at the time.”

This anecdote is more than just a glimpse into the cat-and-mouse game between SEO professionals and Google’s updates. It reflects the continual evolution of SEO strategies in response to Google’s quest for relevance and quality.

The devaluation of EMDs was a clear message from Google – relevance and quality of content are paramount, and shortcuts to high rankings are fleeting.

Backlink profile manipulation

SEO is a wild ride. Sometimes, you can’t help but look at some tactics and just – laugh. Preeti Gupta, founder at Packted.com, has some thoughts on this, especially when it comes to old-school strategies like profile creation and social bookmarking.

“While there are a bunch of outdated things SEOs do, the most fascinating one to me definitely has to be profile creation, social bookmarking, and things like that,” she said.

It’s a funny thing, really. Agencies and SEO folks put in so much time and effort to build these links, thinking it’s the golden ticket.

“Agencies and SEOs waste a lot of their time and resources to build these links and I guess this clears the path for genuine sites,” Gupta said. “It’s both funny and sad at the same time.”

This became even more amusing when the December 2022 link spam update rolled out. It pretty much neutralized the impact of this type of link building.

And the irony? The same folks offering these services were now offering to disavow them!

Negative SEO

Let’s jump back to 2015 with Ruti Dadash, founder at Imperial Rank SEO, who was just dipping her toes into SEO at the time.

“What does SEO stand for?” That was her, a complete newbie at a startup, a small fish in a pond teeming with gigantic, well-established competitors with bottomless marketing budgets. SEO became a secret weapon, helping her rank against the giants.

Dadash shares a vivid memory of waking up one day to find that rankings had skyrocketed. Detective work revealed a sneaky move from a rival: thousands of backlinks pointing straight to Dadash’s site.

But this sudden gift was a Trojan horse. All these links came from sketchy gambling and adult sites, a disaster waiting to happen.

“The scramble to put together a new disavow file, and the relief/disappointment as we lost that sudden surge…” You can imagine how this experience shaped her SEO career.
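A disavow file is a plain-text list that Google’s disavow tool accepts: one URL or `domain:` entry per line, with lines starting with `#` treated as comments. The sketch below collapses a list of spammy backlink URLs into one `domain:` line per host. The input URLs are hypothetical, and hosts are used exactly as they appear (a `www.` host is not merged with its bare domain), so review the output before uploading anything.

```python
from __future__ import annotations

from urllib.parse import urlparse


def build_disavow_file(spam_urls: list[str]) -> str:
    """Collapse spammy backlink URLs into a disavow file with one domain: line per host."""
    hosts = {urlparse(url).hostname for url in spam_urls}
    hosts.discard(None)  # skip entries that had no parseable host
    lines = ["# Disavow file built from suspected negative-SEO backlinks"]
    lines += [f"domain:{host}" for host in sorted(hosts)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_disavow_file([
        "https://casino.example/a",
        "https://casino.example/b?ref=1",
        "http://adult.example/page",
    ]))
```

Disavowing whole domains rather than individual URLs is the usual choice in a negative-SEO cleanup, since attackers tend to generate many pages per spam domain.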

“It’s been a wild ride, from watching how Google has evolved to moving from in-house SEO to freelance, and then to founding my own agency,” Dadash said.

This wild, unpredictable journey makes SEO such a fascinating field, a constant game of cat and mouse with Google’s ever-evolving algorithms.

Hidden text

There was a time when the “coolest” SEO trick in the book was like a magic act – now you see it, now you don’t.

Brett Heyns, a freelance SEO, can't help but reminisce about those days.

“All time best was white text on a white background, filling all the white space with keywords. Boggles the mind that there was a time that this used to work and was ‘standard practice’!” Heyns said.

Hidden text was the stealthy ninja of old-school SEO strategies. In the times when search engines were more like text-matching machines, SEOs could publish one content piece for site visitors and hide another for search engines.

Yes, you heard it right! Web pages were stuffed with invisible keywords, creating long, invisible essays, all in the name of ranking.

Heyns’s memory of this practice takes us back to when consumers would see a conversion-optimized webpage, blissfully unaware of the keyword-stuffed content hidden behind the scenes.

SEOs would ingeniously position white text on white backgrounds or even place images over the text, keeping it concealed from the human eye but visible to the search engine’s gaze.

Some even explored more sophisticated schemes, like cloaking, where scripts would identify whether a site visitor was a search engine or a human, serving different pages accordingly. It was like a clandestine operation, showing keyword-optimized pages to search engines while hiding them from users.

These techniques might sound like relics from the past. Still, they’re a reminder of the evolution of SEO strategies – from the overtly stealthy to the authentically user-centered approaches we value today.

Content scraping and cloning

In the Wild West days of the early internet, SEO was more of an arcane art and less of a science, and it was fertile ground for some pretty wild tactics.

Sandy Rowley, SEO professional and web designer, reminisces about a time when the rules were, well, there weren’t really any rules.

“Back in the '90s, an SEO expert could clone a high traffic website like CNN, and outrank every one of its pages in a week.” Yes, you heard that right – cloning entire high-traffic websites!

This practice, known as scraping, involved using automated scripts to copy all the content from a website, with intentions ranging from stealing content to completely replicating the victim’s site.

It's a prime example of black hat SEO tactics where the cloned sites would appear in search results instead of the original ones, exploiting Google’s ranking algorithms by sending fake organic traffic and modifying internal backlinks.

This advanced form of content theft wasn’t just about plagiarism.

It was about manipulating search engine results and siphoning off the web traffic and, by extension, the ad revenue and conversions from the original sites.

It was a tactic that threw fairness and ethical considerations to the wind, leveraging the loopholes in search engine algorithms to gain a competitive advantage.

New features, plus ones we loved and lost

Seasoned SEOs will all remember (and shed a tear for) at least one tool or feature that has become no more than a relic.

Equally, each will have a story about a new innovation or tactic that they’ve had to quickly comprehend.

Let’s continue our journey through the anecdotes and rifle through Google’s figurative trash can while finding interesting tidbits about current search attributes.

Remember Orkut?

Let’s take a nostalgic stroll down memory lane, back to the days of Orkut with Alpana Chand, a freelance SEO.

Ah, Orkut. Remember it? It was one of the first of its kind, a social media platform even before the era of Facebook, named after its creator, Orkut Büyükkökten.

Orkut was a hub of innovation and connection, especially popular in Brazil and India, offering unique features like customizing themes, having “crush lists,” and even rating your friends.

“I remember Orkut. I used it when I was in engineering college,” Chand said, recalling the days of shared weak Wi-Fi in dorm rooms and big, heavy IBM computers.

Chand paints a picture of when being hooked to Orkut was the norm. “Addicted is understating our obsession,” she said.

And let’s not forget those Yahoo forwards filled with quotable quotes shared on Orkut. Seeing your wall and the new feed popping on it was a different vibe.

“Today's social media features change in the blink of an eye and Orkut's slow stable feature – the wall, the scrapbook, the direct messages were etched on my mind,” Chand shares.

But, like all good things, Orkut had its sunset moment. As platforms like Facebook offered more simplicity, reliability, and innovative features like a self-updating News Feed, Orkut struggled to keep up with its increasing complexity and limited user range.

Eventually, Google shifted its focus to newer ventures, like Google+, and in 2014, it was time to bid Orkut farewell.

“So many memories and an entire memorabilia was buried along with Orkut's discontinuation,” she said, wishing for some saved screenshots of those times.

Hello, featured snippets!

Who doesn’t love a quick answer? That’s what Google’s featured snippets aimed to do, and have done for years now.

Featured snippets, those handy blocks of content directly answering our queries at the top of SERPs, made their grand entrance in January 2014, much to the dismay of SEOs who relied on clicks for even the shortest answers.

They quickly became known as Google’s “answer boxes” or “quick answers,” pulling the most relevant information from web pages and placing it right at the top of our search results.

Megan Dominion, a freelance SEO, remembers the early days of featured snippets and the missteps that followed.

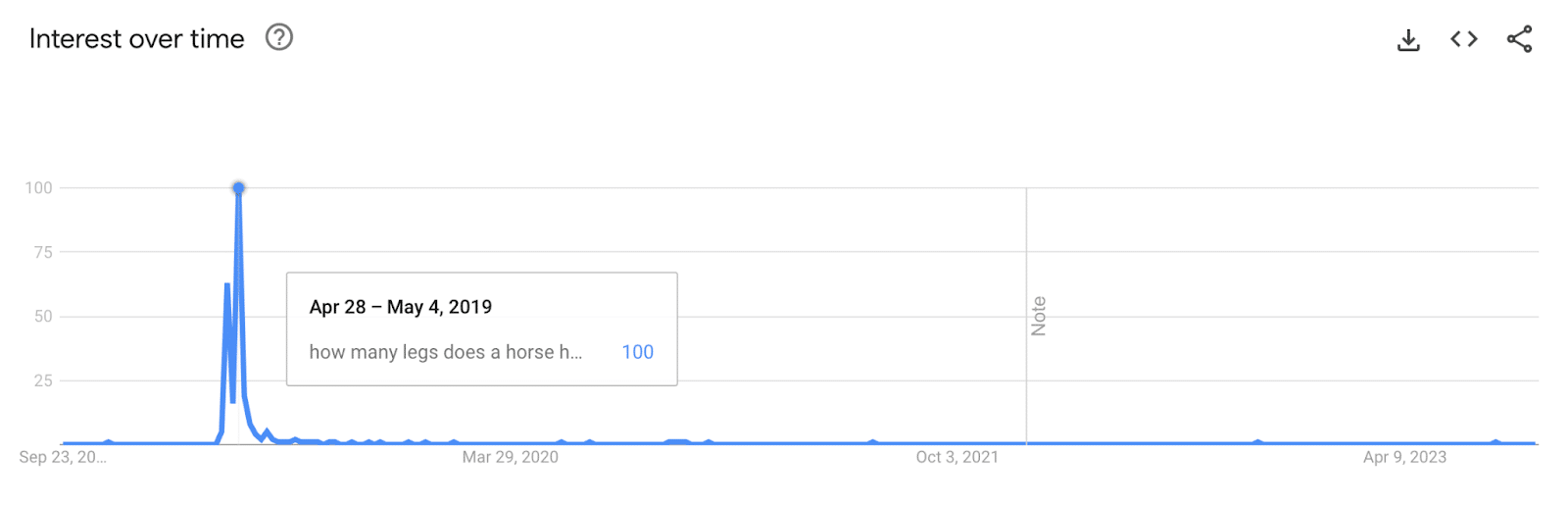

“I remember when the below featured snippets were being spoken about in the SEO industry as well as outside of it,” she said, reminiscing about the time Google got it hilariously wrong.

Just imagine searching for “How many legs does a horse have?” and being told they have six!

“There's a nice spike in search trends for when it happened to commemorate the occasion and the beginning of ‘Google is not always right’,” Dominion points out.

It was a reminder that even the ‘big dogs’ can have their off days. If you fancy a bit of a giggle, check out some more examples of Google’s featured snippets getting it wrong.

Featured snippets, with their prominence and direct answers, became a coveted spot for SEOs and website owners, sparking discussions, strategies, and a slew of articles on optimizing for them.

They were officially named “featured snippets” in 2016, distinguishing them from short answers pulled from Google’s database.

Hreflang havoc

Hreflang attributes, introduced in 2011, are like signposts for Google’s bots, telling them which language and region a page is intended for.

They help avoid duplicate content issues when creating content that’s essentially the same but for different markets.
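In HTML, hreflang annotations are `<link rel="alternate" hreflang="..." href="...">` elements in each page’s head, and each page in a language cluster should list every alternate (itself included), so the links are reciprocal. Missing return links are a common source of hreflang errors. The sketch below builds the tag set for one cluster and flags one-way links; the URLs and language-region codes are hypothetical examples.

```python
from __future__ import annotations

from html import escape


def hreflang_tags(alternates: dict[str, str], x_default: str | None = None) -> list[str]:
    """Build the full set of hreflang <link> tags for one cluster of pages.

    alternates maps a language(-region) code such as "en-gb" to its URL.
    Every page in the cluster should carry this same set of tags.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())
    ]
    if x_default:
        # x-default marks the fallback page for users who match no listed locale.
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return tags


def missing_return_links(pages: dict[str, set[str]]) -> list[tuple[str, str]]:
    """Given page URL -> URLs it declares as alternates, return (a, b) pairs
    where a points to b but b does not point back to a."""
    problems = []
    for page, targets in pages.items():
        for target in targets:
            if target != page and page not in pages.get(target, set()):
                problems.append((page, target))
    return sorted(problems)
```

Usage: `hreflang_tags({"en-gb": "https://example.com/uk/", "de-de": "https://example.com/de/"}, "https://example.com/")` returns three tags, and running `missing_return_links` over a crawl of those pages would surface any German page that forgot to point back to its UK counterpart.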

When they work, it’s smooth sailing, but when they decide to go off-script, the outcome can be hilarious.

Nadia Mojahed, international SEO consultant at SEO Transformer, had a front-row seat to when hreflang attributes decided to have a bit of fun.

Mojahed recalls a frantic call that revealed an ecommerce site in chaos. The site, which had been performing well globally, was suddenly presenting luxury handbags like gourmet dishes.

It’s all thanks to some cheeky hreflang tags. “Imagine Italian pages talking about pasta and French ones describing sneakers as delicacies!” she laughs.

Google turned page titles and descriptions into hilarious misinterpretations. Think “Women’s Fashion” in Spanish being read as “Exotic World of Llamas and Ponchos,” and German “Men’s Accessories” transforming into “Bratwurst-Tasting Bow Ties.”

Getting hreflang attributes right can be a bit of a puzzle, especially for mega-sites with tons of pages. A 2023 study by fellow SEO Dan Taylor even found that 31% of international sites were getting tangled up in hreflang errors.

Mojahed quickly found and fixed the issues, turning the blunder into a learning moment about the quirky challenges of SEO tech.

Next, please! The rel=prev/next drama

Remember the collective gasp in the SEO community when Google dropped the bomb about rel=prev/next for pagination? Yeah, that was a moment.

Lidia Infante, senior SEO manager at Sanity.io, paints the picture vividly.

“The time Google announced that they hadn't been using rel=prev/next for a while! There was a range of reactions. From freaking out over how to handle pagination now to mourning all the developer hours we sank into managing it through rel=prev/next.”

It turns out this signal had been deprecated for some time before the SEO world was in the know.
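For anyone who never worked with it, rel=prev/next was a pair of `<link>` elements in the head of each paginated page pointing to the adjacent pages in the series. The sketch below generates them for page n of N. The `?page=N` URL pattern is a hypothetical convention, and page 1 is assumed to live at the base URL.

```python
from __future__ import annotations


def pagination_links(base_url: str, page: int, total_pages: int) -> list[str]:
    """Return rel="prev"/rel="next" <link> tags for page `page` of `total_pages`."""
    if not 1 <= page <= total_pages:
        raise ValueError("page out of range")

    def url_for(n: int) -> str:
        # Hypothetical URL scheme: page 1 is the base URL, later pages use ?page=N.
        return base_url if n == 1 else f"{base_url}?page={n}"

    links = []
    if page > 1:
        links.append(f'<link rel="prev" href="{url_for(page - 1)}" />')
    if page < total_pages:
        links.append(f'<link rel="next" href="{url_for(page + 1)}" />')
    return links
```

The first page gets only a `next` link and the last page only a `prev` link. Google no longer uses these as an indexing signal, but as the story shows, other search engines still did.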

Infante and many others soon learned that it had other purposes beyond indexing, and well, Google isn’t the only player in the game.

Other search engines were still using it. For many, it was a reminder that Google was not the only search engine they were optimizing for.

This revelation came just before a BrightonSEO edition, and the atmosphere was charged when John Mueller was asked about it on stage during the keynote. “It was a whole vibe,” Infante reminisces.

Realizing the mix-up, Google apologized for not proactively communicating this change.

They promised better communication about such changes in the future, stating:

“As our systems improve over time, there may be instances where specific types of markup are not as critical as it once was, and we’re committed to providing guidance when changes are made.”

Oops!

Google+ or else?!

You’ll be hard-pressed to find an SEO who doesn’t remember Google+, unless they’re super green.

Reflecting on its turbulent journey, Mersudin Forbes, portfolio SEO and agency advisor, shares, “Google+ was a flash-in-the-pan, social media platform pushed on us by Google. So much so that they appended the +1 functionality in search results to make us believe that upvoting the search results increased rankings.”

Google+ emerged with a promise, a beacon of new social media interaction backed by Google’s colossal influence.

Forbes recalls how Google firmly asserted that this feature would be a new signal in determining page relevance and ranking. “This was the start of spammageddon of +1 farming and just as quick as it arrived, it was gone,” he remarks.

The bold venture seemed promising, with innovative features like Circles and Hangouts and rapid user acquisition, reaching 90 million users by the end of its launch year.

However, the spammy notifications and the platform’s failure to maintain active user engagement led to its eventual demise.

“I wonder if the same will happen to SGE, one can only hope,” Forbes said.

Google+ now stands as a reminder of the transient nature of tech innovations, even those stamped with the Google seal.

UA who? The reluctant adoption of GA4

When Google Analytics 4 (GA4) debuted, it wasn’t exactly met with a standing ovation from marketers.

It was more like a hesitant applause, with some even hosting metaphorical funerals for its predecessor, Universal Analytics (UA). Yes, it was that dramatic!

Irina Serdyukovskaya, an SEO and analytical consultant, found a silver lining in the upheaval while grappling with the new intricacies of GA4.

“The first months with this were tough,” she admits. But delving into the unknown of GA4 opened up new career paths and opportunities for her.

“After solving GA4 tracking questions within the SEO field, I started offering this as a separate service and found myself enjoying learning new tools and understanding the data better.”

Serdyukovskaya’s journey resonates with many who initially struggled but eventually found potential growth in adapting to the new system.

“Changes are hard, and we get used to the way things are, but this can also be an opportunity to grow professionally,” she reflects.

The collective groan about another change in the digital world symbolizes both resistance to change and a concealed desire for novelty and learning.

Despite the reluctant adoption and the initial hiccups, the majority eventually boarded the GA4 train, with more than 90% of marketers making the transition, albeit with varying levels of readiness and acceptance.

It wasn’t just a switch but a complete migration to a new platform built from scratch, requiring strategic planning and expert implementation.

GA4 wasn’t merely a new “version.” It was a paradigm shift in how data was collected, processed, and reported.
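Here is a concrete picture of that shift. Universal Analytics organized data around hit types, such as pageviews and events with a fixed category/action/label structure. GA4 records everything as a named event with free-form parameters. The minimal sketch below expresses the same video play both ways, as Measurement Protocol-style payloads. The IDs, event name and values are placeholders, and nothing is sent anywhere.

```python
import json
from urllib.parse import urlencode

CLIENT_ID = "555.1234567890"  # placeholder client ID

# Universal Analytics (Measurement Protocol v1): one "hit" with a fixed
# category/action/label schema, sent as URL-encoded parameters.
ua_hit = {
    "v": "1",              # protocol version
    "tid": "UA-XXXXX-Y",   # placeholder property ID
    "cid": CLIENT_ID,
    "t": "event",          # hit type
    "ec": "video",         # event category
    "ea": "play",          # event action
    "el": "homepage-hero", # event label
}

# GA4 (Measurement Protocol for GA4): a JSON body holding a list of
# named events, each with its own parameters.
ga4_body = {
    "client_id": CLIENT_ID,
    "events": [
        {"name": "video_start", "params": {"video_title": "homepage-hero"}},
    ],
}

if __name__ == "__main__":
    print(urlencode(ua_hit))
    print(json.dumps(ga4_body, indent=2))
```

The GA4 version has no category/action/label slots to fill. Meaning lives in the event name and whatever parameters you attach, which is why UA reports rarely translated one-to-one.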

And with Google sunsetting UA, the reluctant adoption became a necessity, pushing many to leave the comfort of the familiar and step into the unknown, just as Serdyukovskaya did.

Reflections and looking forward

That’s a wrap on 25 years – a trip down memory lane with the seasoned SEO pros who've been through the thick and thin of Google's ever-evolving world.

We’ve dived deep into the archives, laughed over the old wild days, and scratched our heads over the relentless changes and updates we’ve all seen and felt.

Huge thanks to all the SEO pros who chipped in with their tales, insights, and lessons for this article. Your shared experiences give us all a closer, more colorful look at the twists and turns that have made the world of SEO what it is today. It’s been a wild ride, and it’s awesome to hear about it from the folks who’ve lived it!

So, what about the next 25 years?

Will AI and Search Generative Experience (SGE) revolutionize how we search and measure SEO success? Will SEO undergo drastic transformations, or heaven forbid, will it finally die as has been foretold many times? Or, in a plot twist, will we all just surrender and switch allegiances to Bing?

It’s a fascinating future to contemplate – a future where the integration of AI could reshape our interaction with online spaces, making searches more intuitive, personalized, and responsive.

SGE could redefine user engagement, creating more immersive and dynamic experiences.

Will we still be chasing algorithms, or will the advancements enable a more harmonious coexistence where the user experience is paramount? SEO is more about enhancing this experience than outsmarting the system.

Whatever the future holds, here’s to embracing the challenges, exploring new frontiers, and navigating another 25 years of dancing with Google.

Happy 25th, Google! Keep those surprises coming, and keep us guessing!

The post Google Search at 25: SEO experts share memorable moments appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/NSsJo0v

via https://ift.tt/LtownRG https://ift.tt/NSsJo0v

Has Google Ads lost all credibility? Why one advertiser says it’s time to leave

Google stunned the PPC community by openly admitting that it quietly adjusts ad prices to meet targets. The confession comes eight years after Google Ads executive Jerry Dischler denied, at SMX Advanced in 2015, that the search engine manipulates ad auctions.

The backtracking has infuriated digital marketer Greg Finn, so much so that he now believes advertisers should consider leaving Google. But will others follow?

Here’s a recap of Greg’s comments from the Marketing O’Clock podcast.

Google has lost all trust and credibility

- “This is disheartening. First of all, the biggest detriment is that Google has lost all trust and credibility.”

- “All you have in life is your word.”

- “Google has admitted to trying to hit quarterly numbers so that the morale of its people living in high-cost areas is up – but that’s at the expense of the small businesses that it pretends to care about.”

- “Money doesn’t just appear, it comes out of every small business and big business’ pocket that is using Google’s platform.”

Stop calling it an auction

- “We all knew this was going on, but you want to give people the benefit of the doubt.”

- “Google says it cares about advertisers and all these other marketing lies, but the minute it has to hit these marks, the staff ratchet up the spend, and they ratchet the revenue up to 5% or 10%.”

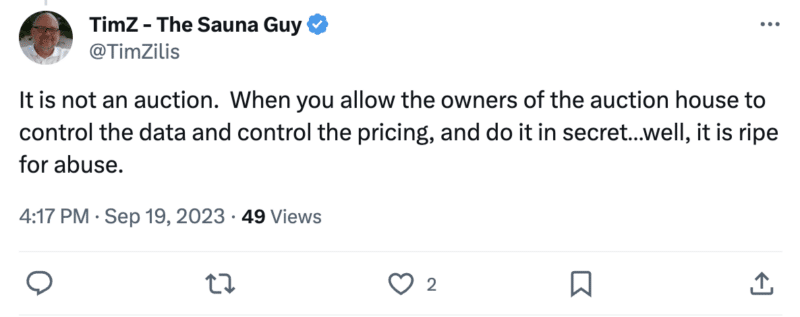

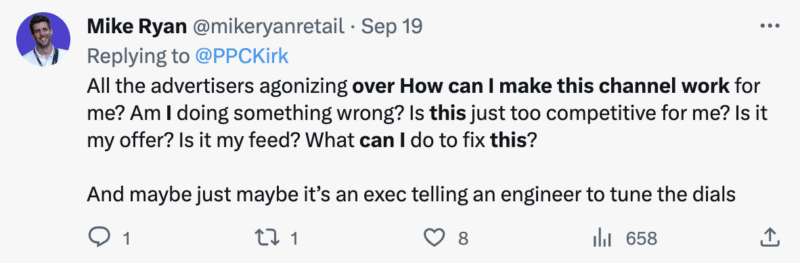

- Greg went on to share some of the reactions he’d seen from the PPC community on X (formerly known as Twitter), which he appeared to support:

Stop lying

- Greg read out a post on X from ex-Googler Ben Kruger, which read:

- “Guys they are running a business, they can do whatever they want with their prices just like you and your clients can.”

- Greg called Ben’s words “fair” in parts, but called Google out for its lack of transparency with advertisers.

- “You can do whatever you want [with your prices], but just don’t tell me that you’re doing things in my best interests.”

- “You can no longer say that, and I can no longer trust you on anything that you say or do because you’re out here, tuning up, and shaking the cushions to hit quarterly profit numbers.”

Google’s terminology is ‘misleading at best’

- “It’s fine if retailers increase their margin on the backend, but in Google’s case, this is marketed as auctions with bids and bid strategies.”

- “That terminology is increasingly misleading at best.”

- “There are factors outside of your control and factors that only matter to the sales team that live in high-income areas that are going to hurt your campaign.”

- “I guarantee Google has never moved costs down 5%.”

RGSP is ‘ridiculous’

- Google Ads executive Jerry Dischler said during the federal antitrust trial that RGSP (randomized generalized second price) boosted Google’s revenue by switching the auction so that the second-place bidder took the top ad slot and the original winner took the second position.

- Dischler told the court: “We flip them, otherwise Amazon would always show up on top.”

- Greg branded this approach “ridiculous”. He added: “People are out here bidding. What world are we living in? What world is this? It’s ridiculous.”
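The minimal sketch below shows what a flip like that does to positions and prices. It models a generalized second-price (GSP) auction with a randomized swap of the top two slots. It assumes bids alone decide rank, but real Google Ads auctions also weigh ad quality, reserve prices and other factors. The flip probability and the pricing rule after a flip are hypothetical simplifications, not Google's actual mechanism.

```python
import random
from typing import List, Optional, Tuple


def run_auction(
    bids: List[Tuple[str, float]],
    flip_probability: float = 0.0,
    rng: Optional[random.Random] = None,
) -> List[Tuple[str, float]]:
    """Return (advertiser, price) per slot, top slot first.

    Ranking uses bids only (no quality score). With probability
    `flip_probability`, the top two advertisers swap slots. Each slot pays
    the bid of the advertiser directly below it, capped at its own bid
    (the cap is an assumption for the flipped case); the last slot pays 0.
    """
    rng = rng or random.Random()
    ranked = sorted(bids, key=lambda b: b[1], reverse=True)
    if len(ranked) >= 2 and rng.random() < flip_probability:
        ranked[0], ranked[1] = ranked[1], ranked[0]
    results = []
    for i, (name, bid) in enumerate(ranked):
        next_bid = ranked[i + 1][1] if i + 1 < len(ranked) else 0.0
        results.append((name, min(bid, next_bid)))
    return results


if __name__ == "__main__":
    bids = [("A", 5.00), ("B", 4.00), ("C", 2.00)]  # hypothetical max CPC bids
    print("GSP:    ", run_auction(bids))
    print("Flipped:", run_auction(bids, flip_probability=1.0))
```

With `flip_probability=0.0`, this reduces to plain GSP pricing. In the flipped case of this toy model, B pays its full $4.00 bid for the top slot while the highest bidder drops to second, which is the kind of outcome Greg is reacting to.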

People should leave!

- “This whole thing just makes me so unbelievably sad. I’ve read everything and heard all the reactions – and I know it’s going to get progressively worse as the years come by.”

- “People should leave. There should not be one ounce of trust that any advertiser has in Google Ads or in any of its platforms, speech, any of its propaganda or anything it puts out.”

- “Google has lost all credibility with this. There is nothing left.”

- “Everyone thinks I’m a Google conspiracist, and everyone thinks I’m nuts – but I’m not. I’m right on this. And that’s why everyone is so hesitant to switch to auto-applied recommendations or use AI or PMax. Google is doing these things to make more money, and they’re admitting it in court. Sad.”

Deep dive. Read our Google antitrust trial updates for all the latest developments from the federal trial.

The post Has Google Ads lost all credibility? Why one advertiser says it’s time to leave appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/4zgjywO

RBI Cancels License Of Nashik Zilla Girna Sahakari Bank

Google to block Bard’s shared chats from showing in Google Search

Google will soon block Bard’s shared conversations from appearing in Google Search. Google Bard recently introduced shared conversations, which let users publicly share the chats they have had with Bard. Soon after, Google Search started discovering, crawling and indexing those URLs.

The issue. Gagan Ghotra posted on X a screenshot showing how a site command for site:bard.google.com/share returned results from Bard. Those results were shared conversations. Here is a screenshot of Bard showing up in the Google Search index:

Google will block Bard results from Google Search. I asked Google’s Search Liaison, Danny Sullivan, about this, and he responded on X: “Bard allows people to share chats, if they choose. We also don’t intend for these shared chats to be indexed by Google Search. We’re working on blocking them from being indexed now.”

Google will soon remove Bard’s shared conversations from showing in Google Search.

Why we care. If you see Bard’s shared conversations showing up in Google Search, don’t fret; they should go away in the coming days. Google said it does not want them in Google Search’s index and is working on blocking them now.
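Google hasn’t said how it is blocking these pages. Site owners in a similar situation commonly serve an `X-Robots-Tag: noindex` HTTP header on the URLs that shouldn’t be indexed. Those pages must stay crawlable for Google to see the header, because a robots.txt disallow blocks crawling, not indexing. The minimal sketch below does this as WSGI middleware. It assumes shared pages live under a hypothetical `/share/` path, and `demo_app` is a stand-in for a real application.

```python
from typing import Callable, Iterable


def noindex_paths(app: Callable, prefix: str = "/share/") -> Callable:
    """Wrap a WSGI app so responses under `prefix` carry X-Robots-Tag: noindex."""

    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        path = environ.get("PATH_INFO", "")

        def start_response_with_header(status, headers, exc_info=None):
            if path.startswith(prefix):
                headers = list(headers) + [("X-Robots-Tag", "noindex")]
            return start_response(status, headers, exc_info)

        return app(environ, start_response_with_header)

    return wrapped


def demo_app(environ, start_response):
    # Hypothetical stand-in for the real application.
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"shared chat"]


app = noindex_paths(demo_app)
```

To try it locally, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` from the standard library will serve the wrapped app.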

The post Google to block Bard’s shared chats from showing in Google Search appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/DLtV8n6

How CCTVs, Strongroom Failed To Stop Rs 25-Crore Heist At Delhi Jeweller's

Bengal Man Comes To Delhi To Buy Apples, Kidnapped By Friend For Ransom

Monday, September 25, 2023

Bengaluru Strike Today, Check Transport Options Before Travelling

3 In Custody After Teen Gang-Raped In Rajasthan, Body Thrown Into Well

Russia Says It Downs Seven Ukrainian Drones Over Belgorod, Kursk Regions

All Schools, Colleges Closed In Bengaluru Tomorrow Due To Bandh

Former Googler: Google ‘using clicks in rankings’

“Pretty much everyone knows we’re using clicks in rankings. That’s the debate: ‘Why are you trying to obscure this issue if everyone knows?'”

That quote comes from Eric Lehman, who spent 17 years at Google as a software engineer working on search quality and ranking. He left Google in November.

Lehman testified last Wednesday as part of the ongoing U.S. vs. Google antitrust trial.

If you haven’t heard this quote yet, expect to hear it. A lot.

But that’s not all Lehman had to say. Google’s machine learning systems BERT and MUM are becoming more important than user data, he said.

- “In one direction, it’s better to have more user data, but new technology and later systems can use less user data. It’s changing pretty fast,” Lehman said, as reported by Law360.

Lehman believes Google will rely more heavily on machine learning to evaluate text than user data, according to an email Lehman wrote in 2018, as reported by Fortune:

- “Huge amounts of user feedback can be largely replaced by unsupervised learning of raw text,” he wrote.

User vs. training data. There was also some confusion around “user data” vs. “training data” when it came to BERT. Big Tech on Trial reported:

“DOJ’s attempt to impeach Lehman’s testimony also seemed to backfire. In response to a DOJ question about whether Google had an advantage in using BERT over competition because of its user data, Lehman testified that Google’s ‘biggest advantage in using BERT’ over its competitors was that Google invented BERT. DOJ then put up an exhibit titled ‘Bullet points for presentation to Sundar.’ One of the bullets on this exhibit said the following (according to my notes): ‘Any competitor can use BERT or similar technologies. Fortunately, our training data gives us a head-start. We have the opportunity to maintain and extend our lead by fully using the training data with BERT and serving it to our users…’

This likely would have been an effective impeachment of Lehman if “training data” meant some kind of user data. But after DOJ concluded its re-direct examination, Judge Mehta asked Lehman what “training data” referred to. Lehman explained it was different from user search data.”

Sensitive Topics. Lehman was also asked by DOJ attorney Erin Murdock-Park about a slide from one of his slide decks on “Sensitive Topics” that instructed employees to “not discuss the use of clicks in search…”

According to reporting from Big Tech on Trial (via X), Lehman said “we try to avoid confirming that we use user data in the ranking of search results.”

In a September 20, 2023 post on X, Big Tech on Trial (@BigTechOnTrial) added: “I didn’t get great notes on this, but I think the reason had something to do with not wanting people to think that SEO could be used to manipulate search results.”

Google = liars? Since discovering this testimony, SEOs have been quick to use Lehman’s quotes as definitive proof that Google has been lying about using clicks or click-through rate for all of its 25 years.

The question of whether Google uses clicks was the first question asked last week during an AMA with Google’s Gary Illyes at Pubcon Pro in Austin. Illyes’s answer was “technically, yes,” because Google uses historical search data for its machine-learning algorithm RankBrain.

Technically yes, translated from Googler speak, means yes. RankBrain was trained on user search data.

We know this because Illyes already told us this in 2018. He said RankBrain “uses historical search data to predict what would a user most likely click on for a previously unseen query.”

RankBrain was used for all searches, impacting “lots” of them, starting in 2016.

Google Search tracks everything. But the fact that Google tracks clicks in Search does not mean clicks are used as a direct ranking factor. In other words, it doesn’t mean that if site A gets 100 clicks and site B gets 101 clicks, site B automatically jumps up to Position 1.

Much as Google uses human raters to evaluate the quality of its search results, Google is likely using clicks to rate the results for queries and train its ranking systems.

Why we care. Does Google use clicks? Yes. But again, probably not as a ranking signal (though admittedly I can’t say that with 100% certainty, as I don’t work at Google or have access to the algorithm). I know clicks are noisy and easy to manipulate. And for many sites and queries, there simply wouldn’t be enough data for clicks to be a useful ranking signal for Google.
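A quick statistics check shows how noisy clicks are. The minimal sketch below computes 95% Wilson score intervals for click-through rates, using made-up impression counts. A 100 vs. 101 click difference falls well inside the noise, and on a low-volume query the interval is too wide to say much at all. This is a generic statistics illustration, not a description of how Google processes click data.

```python
import math


def wilson_interval(clicks: int, impressions: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a click-through rate (z=1.96 gives ~95%)."""
    if impressions == 0:
        return (0.0, 1.0)
    p = clicks / impressions
    denom = 1 + z**2 / impressions
    center = (p + z**2 / (2 * impressions)) / denom
    half = z * math.sqrt(p * (1 - p) / impressions + z**2 / (4 * impressions**2)) / denom
    return (center - half, center + half)


def overlaps(a: tuple[float, float], b: tuple[float, float]) -> bool:
    """True if two intervals share any values."""
    return a[0] <= b[1] and b[0] <= a[1]


if __name__ == "__main__":
    site_a = wilson_interval(100, 1000)  # hypothetical: 100 clicks on 1,000 impressions
    site_b = wilson_interval(101, 1000)  # hypothetical: 101 clicks on 1,000 impressions
    print(f"A: {site_a[0]:.3f}-{site_a[1]:.3f}  B: {site_b[0]:.3f}-{site_b[1]:.3f}")
    print("Distinguishable:", not overlaps(site_a, site_b))
    low = wilson_interval(2, 20)  # a hypothetical low-volume query
    print(f"Low-volume CTR range: {low[0]:.3f}-{low[1]:.3f}")
```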

Dig deeper. The biggest mystery of Google’s algorithm: Everything ever said about clicks, CTR and bounce rate

The post Former Googler: Google ‘using clicks in rankings’ appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/wASbtFm

5 outdated marketing KPIs to toss and what to reference instead

Moving away from conventional KPIs and toward a more advanced understanding of your campaigns gives you real competitive advantages.

I could have written about this topic years ago, but it’s especially important as engagement costs on major advertising channels continue to increase, and an unpredictable economy puts a premium on efficiency.

Ready to change the way you measure your campaigns? In this article, I’ll look at five KPIs I still hear clients reference and explain:

- Why it’s past time to replace them.

- What they should analyze instead.

- Why it matters.

Bad KPI 1: Spend

What to use instead: Profit

I’m not saying the concept of a budget is moot, but spend should not be the starting point or goal for campaigns unless:

- You’re just beginning and have no CRM data to reference.

- You’re going for scale without regard to efficiency.

That said, we still get companies coming to us frequently and saying, “We’d like to spend this.”

Even more off-base, “We’d like to spend {x} on Google, {y} on Facebook, and {z} on LinkedIn.”

A better approach is to aim for efficiency goals, agnostic of channel.

If you start with an ROI goal of 3.0, good analytics folks will be able to crunch numbers and tell you how much you can spend and stay within that goal – no matter which channel you spend it on.
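The minimal sketch below shows the kind of channel-agnostic number-crunching this describes. Several inputs are assumptions. ROI is taken to mean revenue divided by spend. Each channel's revenue follows a hypothetical diminishing-returns curve (revenue = k × √spend, with made-up k values), whereas real curves would come from your own data. The code adds budget in small increments to whichever channel returns the most revenue for the next dollar, and stops before blended ROI would fall below the target.

```python
import math

# Hypothetical diminishing-returns curves: revenue = k * sqrt(spend).
CURVES = {"google": 120.0, "facebook": 90.0, "linkedin": 60.0}


def revenue(channel: str, spend: float) -> float:
    return CURVES[channel] * math.sqrt(spend)


def allocate(target_roi: float = 3.0, step: float = 100.0, max_total: float = 1_000_000.0):
    """Add spend in `step` increments to the channel with the highest marginal
    revenue, stopping before blended ROI (revenue / spend) drops below target."""
    spend = {channel: 0.0 for channel in CURVES}
    total_spend = total_revenue = 0.0
    while total_spend + step <= max_total:
        gains = {c: revenue(c, spend[c] + step) - revenue(c, spend[c]) for c in CURVES}
        best = max(gains, key=gains.get)
        if (total_revenue + gains[best]) / (total_spend + step) < target_roi:
            break  # the next increment would push blended ROI under the goal
        spend[best] += step
        total_spend += step
        total_revenue += gains[best]
    return spend, total_spend, total_revenue


if __name__ == "__main__":
    plan, total, rev = allocate()
    print({c: round(s) for c, s in plan.items()}, f"total ${total:,.0f}, ROI {rev / total:.2f}")
```

The channel split falls out of the efficiency goal rather than being fixed up front, which is the point of starting from ROI instead of “{x} on Google, {y} on Facebook.”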

Referencing spend without tracking efficiency is how you hit growth walls (and get on the wrong side of your CFO).

Specifying spend across channels is a good way to doom yourself to the fate of spending too much on certain channels and not enough on other, more incremental sources of revenue.

If you are going for scale without regard to efficiency, metrics like conversions, spend, revenue, and visitors do become more important, while CPA and ROAS (efficiency metrics) will take a hit.

A core tenet of digital marketing is that the more conversions you get, the more expensive they are, so you’ll have to decide whether your first goal is improving efficiency or driving scale.

Bad KPI 2: Platform-provided CPA

What to use instead: CRM-based CPA

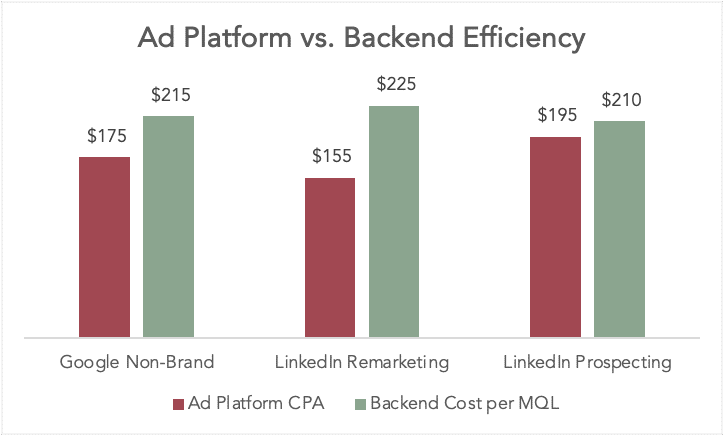

Relying solely on CPAs delivered by Google Ads, Facebook and LinkedIn without assessing the quality of those acquisitions (leads in B2B, purchases in ecommerce) makes it likely you’re spending too much on the wrong leads.

(Note: Google Search Partners and display campaigns produce particularly weak lead quality.)

Instead, integrate your CRM data to understand cost per down-funnel metrics (for B2B) or cost per CLTV (B2C and ecommerce).

This is especially important for B2B, given its long sales cycles and purchase stages.

Knowing what you’d like to pay for opportunities and understanding what you have to pay to acquire them on certain channels is more important than straight-up lead acquisition.

And it’ll make you more likely to swallow high CPCs (hello, LinkedIn) if the resulting leads carry enough value.
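The minimal sketch below shows the difference using made-up spend and CRM numbers. Platform CPA divides spend by the leads the ad platform reports. A CRM-based view divides the same spend by the leads that actually reached the opportunity stage. The channel names and figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ChannelStats:
    spend: float        # ad spend reported by the platform
    leads: int          # conversions reported by the platform
    opportunities: int  # leads that reached the opportunity stage in the CRM


def cost_per(spend: float, count: int) -> float:
    """Spend divided by a count; infinite if the count is zero."""
    return spend / count if count else float("inf")


# Hypothetical numbers for illustration only.
channels = {
    "search_partners": ChannelStats(spend=5_000, leads=250, opportunities=5),
    "linkedin": ChannelStats(spend=5_000, leads=50, opportunities=10),
}

if __name__ == "__main__":
    for name, s in channels.items():
        print(f"{name}: platform CPA ${cost_per(s.spend, s.leads):,.0f}, "
              f"cost per opportunity ${cost_per(s.spend, s.opportunities):,.0f}")
```

With these numbers, the channel that looks five times more expensive on platform CPA is the cheaper one per opportunity.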

Dig deeper: 3 steps for effective PPC reporting and analysis

Bad KPI 3: Click-based CPA

What to use instead: Incrementality-based CPA

Click-based CPA (think first-click, last-click, or cookie- or UTM-based MTA) ignores the contributions of impression-based advertising campaigns, whether it’s a YouTube ad, a programmatic ad or a billboard you sponsored on a highway near one of your target geos.

True CPA is based on incrementality, which accounts for effects like the halo effect and is measured with methods such as brand lift testing and geo lift testing.

It means being agnostic to clicks vs. impressions and understanding the true effect of any advertising interaction.

This can be relatively complex to set up. Still, there are native tools, like Facebook lift tests and Google’s CausalImpact R package using Bayesian structural time-series models, that can be a good starting point.

I recommend figuring out how much data you need to draw a statistically significant conclusion and only running these initiatives in test locations so you’re not curtailing entire campaigns while you assess their effects.
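The minimal difference-in-differences sketch below shows the arithmetic behind a geo lift test, using made-up numbers. It compares conversions in test regions (where a campaign ran) against comparable control regions (where it didn’t), before and during the test. It then divides the campaign’s spend by the incremental conversions. This is a deliberately simple technique, not the Bayesian structural time-series approach CausalImpact uses, and it assumes test and control regions would otherwise have trended the same way.

```python
def incremental_conversions(test_before: float, test_during: float,
                            control_before: float, control_during: float) -> float:
    """Difference-in-differences: the test geos' change minus the control geos' change.

    Assumes comparable regions that would have moved in parallel without the campaign.
    """
    return (test_during - test_before) - (control_during - control_before)


def incremental_cpa(spend: float, incremental: float) -> float:
    """Campaign spend per conversion the campaign actually caused."""
    if incremental <= 0:
        raise ValueError("No measurable lift; incremental CPA is undefined.")
    return spend / incremental


if __name__ == "__main__":
    # Hypothetical weekly conversions in matched test and control geos.
    lift = incremental_conversions(test_before=400, test_during=520,
                                   control_before=410, control_during=450)
    spend = 8_000  # hypothetical campaign spend in the test geos
    print(f"Incremental conversions: {lift}")
    print(f"Incremental CPA: ${incremental_cpa(spend, lift):,.2f}")
```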

Bad KPI 4: Average CPA/Average ROAS

What to use instead: Marginal CPA/Marginal ROAS

When you’re using marginal CPA, you’re really trying to figure out what you paid for each additional conversion, rather than assuming you pay the same for (or get the same from) every new customer.

Let’s illustrate this with a simple scenario: say you’re taking an average CPA from Facebook ads, which brought in a mix of expensive and cheaper customers, all worth roughly the same revenue amount.

If you take the average CPA, you might see that you spent $2 to acquire a new customer, whereas marginal CPA might show that you converted a bunch of new customers at $1.50 and a handful at $8.

Rather than turn up the dial across the board, it’d be smarter to keep finding more cost-effective customers like the first bunch. Don't spend more to reach more expensive customers who provide no additional value.
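The minimal sketch below works through the arithmetic behind that example. The customer counts are hypothetical, chosen so that 24 customers at $1.50 and 2 at $8 average out to the $2 CPA above. Results are recorded as cumulative spend and customers at each spend level, and marginal CPA is the change in spend divided by the change in customers between levels.

```python
# Hypothetical cumulative results as spend was scaled up: (total spend, total customers).
spend_curve = [(0.0, 0), (36.0, 24), (52.0, 26)]


def average_cpa(curve):
    """Total spend divided by total customers at the final spend level."""
    spend, customers = curve[-1]
    return spend / customers


def marginal_cpas(curve):
    """Cost per customer for each additional tranche of spend."""
    return [
        (s1 - s0) / (c1 - c0) if c1 > c0 else float("inf")
        for (s0, c0), (s1, c1) in zip(curve, curve[1:])
    ]


if __name__ == "__main__":
    print(f"Average CPA: ${average_cpa(spend_curve):.2f}")
    print("Marginal CPAs:", [f"${m:.2f}" for m in marginal_cpas(spend_curve)])
```

The average looks healthy, while the last tranche of spend bought two customers at four times the price.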

Bad KPI 5: Impression share lost to bidding (search)

What to use instead: Impression share lost to budget (search)

If you are running search campaigns and want to lower spend, there are two main ways to do it.

- You drop bids or targets to decrease CPCs.

- You lower the campaign’s daily budget, which forces the campaign to turn off for portions of the day.

When you drop bids or targets and lose impression share, a lower CPC will help produce more clicks and conversion opportunities for the same budget.

I’ve seen brands use bidding strategies with goals of capturing something like 90% of available impression share (IS), which gives Google the green light to overcharge.

In these scenarios, switching to manual CPC targets and aiming lower (thereby losing some impression share) immediately tunes up performance and efficiency.

When you drop your budget, the campaign will hit the daily budget and turn off. This will lower overall spend and impression share but keep the same efficiency. So keep budgets up and control spend using bids and efficiency targets!

There are far-reaching implications when you embrace this "scale vs. efficiency" mindset.

Let’s say you are a B2B company that always sees poor performance on weekends. Instead of turning the weekends off, lower the bids/targets until the traffic is profitable.
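The minimal sketch below compares the two ways of cutting spend. It uses a hypothetical bid landscape, the kind of estimate a bid simulator produces, with made-up numbers. The goal is to spend about $1,800 a day: either keep the high bid and cap the budget, or lower the bid. The model assumes a budget-capped campaign keeps paying the same average CPC until it shuts off.

```python
# Hypothetical bid landscape: max CPC bid -> (daily clicks available, average CPC).
LANDSCAPE = {4.00: (1000, 3.00), 2.50: (900, 2.00), 1.50: (700, 1.20)}


def clicks_with_budget_cap(bid: float, budget: float) -> float:
    """Keep the bid and let the budget turn the campaign off once it's spent."""
    clicks, cpc = LANDSCAPE[bid]
    return min(clicks, budget / cpc)


def clicks_with_lower_bid(budget: float) -> float:
    """Pick the bid whose full-day cost fits the budget and yields the most clicks."""
    affordable = [clicks for clicks, cpc in LANDSCAPE.values() if clicks * cpc <= budget]
    return max(affordable, default=0)


if __name__ == "__main__":
    budget = 1_800
    print("Cap the budget at the $4.00 bid:", clicks_with_budget_cap(4.00, budget))
    print("Lower the bid instead:", clicks_with_lower_bid(budget))
```

Under these assumptions, the same spend buys 600 clicks with a budget cap and 900 with a lower bid. That gap is the efficiency the budget lever leaves on the table.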

Next steps

Some of these – especially the first and last – should be easy to implement right away. Others may need you to find a trusted analytics resource to help you sketch out some models and integrate the right data.

But by reading this far, you’ve already taken the first step: casting a critical eye on boilerplate KPIs that aren’t helping you truly optimize the effectiveness of your marketing campaigns.

One word to the wise: make sure you get the right people on board before you flip the switch on any of these. Anyone who leans on the old KPIs to gauge your work should be aligned with what success looks like going forward.

Dig deeper: Tracking and measurement for PPC campaigns

The post 5 outdated marketing KPIs to toss and what to reference instead appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/r0LfBF6

1 Lakh Cameras And A Helipad: Hyderabad Police's New 'War Room'

Sunday, September 24, 2023

Class 10 Boy Dies After Falling From 15th Floor Of Noida High-Rise: Cops

1 Labourer Killed, Another Injured As Wall Collapses In Uttarakhand

Maharashtra Man Strangles Wife To Death Over Suspected Infidelity: Cops

PM Modi Flags Off Jharkhand's 2nd Vande Bharat Express

Saturday, September 23, 2023

Bengaluru Strike Over Cauvery Issue On Tuesday: What's Open, What's Not

12-Year-Old Kolkata Girl Tests Positive For Dengue, Dies In Hospital

4, Including Paralysed Woman, Die Due To Flooding In Rain-Hit Nagpur

Private Firm Employee Arrested For Embezzlement Of Rs 85 Crore In Gurugram

"Politically Connected" Delhi Man Dies By Suicide; Needed Money, Say Cops

Friday, September 22, 2023

DUSU Election Results: Counting Of Votes In DU Students Union Polls Begins

Jharkhand Woman Out For Walk With Fiance Gang-Raped, 5 Arrested: Cops

Delhi Woman Pours Acid On Daughter-In-Law, Arrested: Cops

Google accused of downplaying ad price manipulation

Google has been accused of downplaying how much it quietly raises prices in its ad auctions.

The search engine admitted at the federal antitrust trial that it “frequently” inflates ad prices without telling advertisers, by as much as 5% and sometimes 10%.

But marketers are calling the search engine out for being too “conservative” with these figures as they believe the real number is significantly higher.

Why we care. Advertisers are becoming increasingly frustrated with Google due to long-held suspicions around ad price manipulation and a lack of transparency. The industry accepts that the search engine has a right to set minimum pricing thresholds. The sticking point is the lack of transparency about how those thresholds change over time and how they can directly impact advertiser performance.

Shady business. Christine Yang, VP of media at Iris, told Adweek that she believes the real range of fluctuation can sometimes be as much as 100%. She said:

- “[Google] claiming 5% is a more conservative number to make it sound like the natural ebb and flow of a marketplace.”

- “The level to which [price manipulations] happens is what we don’t know. It’s shady business practices because there’s no regulation. They regulate themselves.”

Why quietly inflating ad prices matters. Google’s ability to increase ad prices, especially without facing strong competition, could bolster the Justice Department’s claim that Google maintains an unlawful monopoly. A pricing argument doesn’t apply to Google’s free search engine. There, the case has to rest on concerns like privacy standards, which might have been stronger in a more competitive search industry.

What has Google said? Following testimony from Google Ads executive Jerry Dischler, a Google spokesperson told Search Engine Land:

- "Search ads costs are the result of a real-time auction where advertisers never pay more than their maximum bid. We’re constantly launching improvements designed to make ads better for both advertisers and users."

- "Our quality improvements help eliminate irrelevant ads, improve relevance, drive greater advertiser value, and deliver high quality user experiences."
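Google’s point that advertisers “never pay more than their maximum bid” doesn’t rule out the price increases it admitted to. The minimal sketch below shows why. It assumes a simplified second-price model in which the winner’s CPC is the runner-up’s bid times a hypothetical pricing adjustment, capped at the winner’s max bid. The 5% and 10% values mirror the figures reported from the trial; the model itself is an illustration, not Google’s pricing logic.

```python
def clearing_price(max_bid: float, runner_up_bid: float, adjustment: float = 0.0) -> float:
    """Second-price CPC raised by `adjustment`, never above the winner's max bid."""
    return min(max_bid, runner_up_bid * (1 + adjustment))


if __name__ == "__main__":
    max_bid, runner_up = 2.00, 1.80  # hypothetical bids
    for adj in (0.0, 0.05, 0.10):
        print(f"adjustment {adj:.0%}: CPC ${clearing_price(max_bid, runner_up, adj):.2f}")
```

Every price in that loop stays under the $2.00 bid, so the statement holds even as the CPC climbs.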

Deep dive. Read our Google search antitrust trial updates article for all the latest news from the courtroom.

The post Google accused of downplaying ad price manipulation appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/QJtHRIf

Thursday, September 21, 2023

UP Trade Fair, MotoGP: Noida Police Revises Advisory For Goods Carriers

AMA with Google’s Gary Illyes: 15 quick SEO takeaways

Google’s Gary Illyes fielded several questions during an AMA at Pubcon Pro in Austin this afternoon from moderator Jennifer Slegg. Here are some of the highlights of the interview.

1. Does Google use user click data in ranking?

“Technically, yes,” Illyes said. This is because historical search data is part of RankBrain.

2. Unlaunches happen ‘a lot’

Things change a lot (emphasis his) in search ranking at Google, Illyes said. What is true for ranking today could be wrong in two weeks.

Google is known for experimenting with Search, and Illyes noted that it’s very hard to keep track of launches and “unlaunches,” adding that these unlaunches happen “a lot.”

This makes sense when you consider Google rolled out 5,000 changes to Search in 2021 and ran a total of 800,000 experiments.

3. Factors vs. signals vs. systems

The main difference between factors and signals is just language, Illyes said. At one point, they wanted people to differentiate between them.

Ranking systems are more complex: this is when Google takes multiple signals (e.g., from crawling and indexing data) and uses them to rank pages. Ranking systems are also more “stable than signals,” Illyes said.

4. Why Google doesn’t index everything

In short, the Internet is “insanely big,” Illyes said. There are probably hundreds of trillions of webpages that Google has sight of – but there are even more than that Google can’t access (e.g., content behind a login page).

- “There is virtually no storage you can use to index all of them. It’s not possible to index it in a way you can serve it,” Illyes said.

5. You don’t have to label AI content for Google

Labeling AI content is not necessary for Google search – “I don’t think we care” – Illyes said. But he suggested labeling it if your users would appreciate it.

Humans can cause more trouble than AI on certain topics, Illyes noted. He reiterated once again that Google doesn’t care how content is created – by AI, human or both.

“As long as I will learn from it, learn correct information, why would it matter?” Illyes said.

6. AI content = no typos

One thing Illyes noticed while analyzing the output of ChatGPT and generative AI tools is that it doesn’t have typos.

- “Computers don’t make mistakes unless they were instructed to,” he said.

7. Why niche sites were impacted by helpful content update

When it comes to helpful content, niche sites often don’t fall into a category Google is looking to promote. To be clear, Illyes was not referring to all types of niche sites; he was mostly talking about affiliate-type niche sites that are heavily money-driven.

8. You can see gains between core updates

If your site is impacted by a Google core update, you should start working on things that could help your site improve and get pushed back up in Search results.

- “Maybe the next core update will help you more. Don’t think of updates in isolation. We’re using hundreds of signals to rank pages,” Illyes said.

He said waiting and holding your breath between core updates would be bad for your website’s health.

9. Comments could signal that a site has an active community

While many websites removed comment sections and forums in the past 10 years, Illyes said that comments can be good.

- “Especially if I know the site has a strict rule about how users can behave on the site, then I would trust info from those users more,” Illyes said.

Illyes didn’t say comments are a ranking signal; he was speaking more from his own perspective. It was an interesting insight, especially considering how moderated user-generated sites like Reddit and Quora have seen gains following recent Google updates.

10. Google will keep launching updates in December

Google will continue to release updates during the holidays – Google used to avoid doing this, but Illyes called that an “old thing.”

- “The problem is around that time, everyone tries to manipulate search results. With these updates we’re trying to course correct. I don’t think we should stop releasing updates in that period of time. Honestly I would hope that the updates will actually help you rank better if you were not trying to manipulate search results.”

11. Core Web Vitals = low priority

Short but sweet:

- “If you don’t have anything better to do on your site, go do Core Web Vitals. Most sites won’t see benefit playing around with it,” Illyes said.

12. No voice data in GSC

Illyes said it was too difficult to get voice search data and doesn’t see a reason to add it to Google Search Console. He said it would take a “considerable amount of engineering time” to expose that data.

13. Expired domain signals are not inherited

If a domain expires, and somebody buys that domain, any signals the site had accumulated will not be transferred to the new domain owner. Google knows when a domain expires.

So if you bought an expired domain and tried to rebuild it (e.g., by getting all the content from Wayback Machine), you would be building the site from scratch, as if it were a new domain.

14. Use H tags for accessibility

From Google's perspective, it would be "pretty stupid" to rely on H1-H6 tags to understand the order and hierarchy of content, Illyes said.

He suggested using a screen reader on your site to make sure the content doesn’t “read wrong.” Use H tags where you need to use them, where it makes sense, Illyes said.
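Listening with a screen reader is the check Illyes recommended. As a rough first pass before that, a short script can print a page's heading outline and flag spots where the hierarchy jumps down more than one level (say, an H1 followed directly by an H3). The sketch below uses only Python's standard-library `html.parser`; the class and function names are hypothetical, and this is a proxy for how the page might "read," not a substitute for a screen reader or a statement about how Google processes headings.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collects (level, text) pairs for h1-h6 elements in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []  # list of (level, text) tuples
        self._level = None  # level of the heading currently open, if any
        self._buf = []      # text fragments seen inside the open heading

    def handle_starttag(self, tag, attrs):
        # Match h1..h6 only (tags like "hr" or "head" are ignored).
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._buf = []

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            # Collapse whitespace so nested inline tags read naturally.
            text = " ".join("".join(self._buf).split())
            self.headings.append((self._level, text))
            self._level = None

    def handle_data(self, data):
        # Text inside nested inline tags (e.g. <em>) is still captured.
        if self._level is not None:
            self._buf.append(data)


def outline(html):
    """Return the page's headings as a list of (level, text)."""
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    return parser.headings


def skipped_levels(headings):
    """Return headings that jump more than one level deeper than the previous one.

    The outline is assumed to start at level 1, so a page whose first
    heading is an h2 or deeper is also flagged.
    """
    problems = []
    prev = 0
    for level, text in headings:
        if level > prev + 1:
            problems.append((level, text))
        prev = level
    return problems


if __name__ == "__main__":
    page = "<h1>Guide</h1><p>Intro</p><h3>Details</h3><h2>Summary</h2>"
    for level, text in outline(page):
        print("  " * (level - 1) + f"H{level}: {text}")
    print("Skipped levels:", skipped_levels(outline(page)))
```

On the sample page, the H3 "Details" is flagged because it follows the H1 directly. Going back up from H3 to H2 is not flagged, since moving to a shallower level is normal.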

15. Importance of links is ‘overestimated’

Links are not a "top 3" ranking signal and haven't been "for some time," Illyes said, adding that there really isn't a universal top 3.

It's absolutely possible to rank without links, Illyes said. He cited a page he knew of that had zero internal or external links yet ranked in Position 1 for Porsche cars; Google had found the page only via a sitemap.

Content continues to be the number one ranking signal.

- “Without content it literally is not possible to rank. If you don’t have words on page you’re not going to rank for it. Every site will have something different as the top 2 or 3 ranking factors,” Illyes said.

The post AMA with Google’s Gary Illyes: 15 quick SEO takeaways appeared first on Search Engine Land.
