Tuesday, January 31, 2023

This day in search marketing history: February 1

Google accuses Bing of copying its search results

In 2011, the headline “Google: Bing Is Cheating, Copying Our Search Results” appeared first on Search Engine Land.

As Danny Sullivan reported: “Google has run a sting operation that it says proves Bing has been watching what people search for on Google, the sites they select from Google’s results, then uses that information to improve Bing’s own search listings.”

The story actually began in May 2010, when Google noticed that Bing was returning the same sites as Google when someone would enter unusual misspellings. By October 2010, the results for Google and Bing had a much greater overlap than in previous months. Ultimately, Google created a honeypot page to show up at the top of 100 “synthetic” searches (queries that few people, if anyone, would ever enter into Google).

This story got picked up by dozens of media outlets as both companies got into a public war of words and blog posts.

Google called Bing’s search results a “cheap imitation.”

Meanwhile, Bing called the sting operation a “spy-novelesque stunt” and defended their monitoring of consumer activity to influence Bing’s search results.

And all this happened on the same day as Bing’s Future of Search Event, where the Google-Bing dispute raged on in person.

Oh what a day.

Also on this day

Auto-tagging added to Google Merchant Center free listings

2022: Auto-tagging added URL parameters to your tracking URLs for better analytics and measurement.

Microsoft rolls out portfolio bid strategies and automated integration with Google Tag Manager

2022: The portfolio feature automatically adjusted bidding across multiple campaigns to balance under- and over-performing campaigns that shared the same bidding strategy.

Google Search launches about this result feature

2021: The feature helped searchers learn more about the search result or search feature they were interested in clicking on.

Google adds Black-owned business label to product results

2021: The label read “identifies as Black-owned” and showed in the product listing results within Google Shopping.

Marketers say COVID vaccines create hope for quick return of in-person events

2021: Marketers were seeing an end to conditions that had made business travel to training seminars, conferences and trade shows unsafe.

Video: Dawn Beobide on Google confirmed vs unconfirmed algorithm updates

2021: In this installment of Barry Schwartz’s vlog series, he also chatted with Beobide about the page experience update.

Bing Ads rolls out multiuser access with single sign-on

2018: Multi-User Access allowed users to have one email and password to access all of the Bing Ads accounts they manage.

Restaurant owners can now edit menu listings in Google My Business

2018: This made it possible to create a structured restaurant menu for display in mobile search listings directly in Google My Business.

Google Assistant adds new media capabilities ahead of HomePod release

2018: You could now wake up to a favorite playlist and use voice to pick up where you left off with Netflix shows.

Google wins ‘right to be forgotten’ case in Japanese high court

2017: Japan’s high court ruled that search results are a form of speech entitled to protection.

Google’s Fake Locksmith Problem Once Again Hits The New York Times

2016: Companies, often not based in the local area, set up fake locations within Google Maps to trick the algorithm into thinking they were locally based.

Valentine’s Day Searches Start Now: When They’ll Peak Depends On The Category

2016: Data from Bing provided key trends on Valentine’s Day-related searches — including gifts, candy, flowers, restaurants and jewelry — and ad performance.

Google Search iOS App Adds “I’m Feeling Curious” To 3-D Touch

2016: Hard pressing on the app brought up a menu for the “I’m Feeling Curious” button.

Google Expanding Candidate Cards, Will Also Offer Primary Voting Reminders

2016: In search and Google Now, users were able to get a combination of candidate-generated content and third-party content about the primaries and the election.

Where Yahoo Might Again Compete In Search: Mobile

2014: With the right content and user experience, Yahoo could have generated new “search” usage and ad revenue from mobile.

Google Settles With France: No ‘Link Tax,’ But €60 Million Media Fund

2013: The settlement ended several months of debate over France’s plan to charge Google for linking to French news content.

Google Submits Formal European Antitrust Settlement Proposal

2013: The proposal was required to address four “areas of concern” – “search bias” and “diversion of traffic”; improper use of third-party content and reviews by Google; third-party publisher exclusivity agreements; and portability of ad campaigns to other search platforms.

Microsoft Sued By Company That Won Patent Lawsuit Against Google In 2012

2013: The two patents (originally issued in the early 1990s and owned by Lycos, which later sold them) pertained to the ranking and placement of ads in search results.

The Lead Up To the Super Bowl: How Are We Searching?

2013: Answer: On multiple devices. For information on the football teams, recipes and snacks.

Search In Pics: The Google Business Card Collection, Silver Android Statue & Golden Gate Bridge Pin

2013: The latest images showing what people eat at the search engine companies, how they play, who they meet, where they speak, what toys they have and more.

French Court Fines Google $660,000 Because Google Maps Is Free

2012: Google remained “convinced that a free high-quality mapping tool is beneficial for both Internet users and websites.”

Google Pledges Crack Down on Unscrupulous AdWords Resellers

2011: Under the terms, agencies had to provide their clients with metrics on costs, clicks and impressions on AdWords at least monthly, if not more often.

Blekko Bans Content Farms From Its Index

2011: Blekko decided to ban the top 20 spam sites from its index entirely, including ehow.com.

Speculation, Intrigue Surround Google’s Delayed Cloud-Tunes Music Service

2011: Licensing issues had delayed the service, despite Google offering boatloads of cash to music labels.

Google Finally Adds Check-Ins To Latitude, With A Couple Twists

2011: Latitude could send reminders to check in at locations when you arrived, supported automated check-ins at places and automatically checked you out when you left a location.

Google New Local Ad Category Invades The “7 Pack”

2010: The local business ad (“enhanced listings”) allowed a business to stand out with an “enhanced” presence on the map or in the map-related listings on the SERP.

The Latest On Google News Sitemaps

2010: News publishers had through April 2010 to modify their news sitemap to accommodate the new protocol.

Report: Google To Bring More “Transparency” To AdSense Revenue Sharing

2010: Google sales boss Nikesh Arora reportedly said that Google would consider giving more transparency about revenue splits in AdSense.

AP & Google Reach A Deal – Sort Of

2010: Google and the Associated Press reached an agreement allowing Google to continue using AP content. But whether this was a long-term agreement was unclear.

SEOmoz Leaves The Consulting Business To Focus On Software

2010: The SEO agency had recently launched Open Site Explorer and offered a variety of SEO tools.

Can Google Kill Microsoft’s Internet Explorer 6?

2010: Google announced they would be discontinuing their support for “very old browsers.”

US Appeals Court Allows Google Street View Trespass Lawsuit To Continue

2010: The Boring couple had first sued Google in early 2008 for taking pictures of their suburban Pennsylvania home, which was on a clearly marked private road.

2000 In Review: AdWords Launches; Yahoo Partners With Google; GoTo Syndicates

2010: Major events from the year 2000 in consumer search.

Can Searchers Find The Superbowl?

2009: Search engines and websites still had lots of room to improve in order to connect with searchers.

Scoring The Superbowl Ads & Search: Do Broadcast Marketers Get Online Acquisition?

2009: It was the year of the microsite.

Microsoft Makes $45 Billion Bid To Buy Yahoo

2008: Microsoft was ready to bid $31 per share to Yahoo’s board of directors to purchase the company, a deal potentially worth $45 billion. (Yahoo ultimately rejected this bid – and it wouldn’t be the last time.) More coverage of the bid:

- Live Blogging Microsoft’s Bid For Yahoo Call

- Microsoft Call: “We Love The Yahoo Brand” [But Can The Deal Happen?]

- MSFT + YHOO: What Would Microsoft Yahoo Look Like?

- Q&A With Microsoft On Proposed Yahoo Purchase: 2+2 = #1

- Microsoft + Yahoo: Recapping Reactions

Mine The Web’s Socially-Tagged Links: Google Social Graph API Launched

2008: The API allowed developers to discover socially-labeled links on pages and generate connections between them.

“Open Network” A Reality, C Block Of 700MHz Spectrum Hits $4.6 Billion “Reserve Price”

2008: Effectively, the rules required that “any legal consumer device” must be allowed to access the C Block broadband network.

Up Close With Yahoo’s New Delete URL Feature

2007: Pages would continue to be crawled; they just wouldn’t get indexed.

Google News Engine Bugging Out

2007: It was returning 500 errors, graphics weren’t loading and searches weren’t working.

National Pork Board Goes After Breastfeeding Search Marketer

2007: The National Pork Board thought one of her project’s T-shirts violated their trademark on the phrase “The Other White Meat.”

Google Fensi: Google’s Asian Social Networking Site?

2007: Was it a social network? A game? Or a service? We may never know.

Marchex Launches Review Aggregation Feature ‘Open View’

2007: Open View aggregated user and expert reviews and generated a dynamic summary in more or less a single paragraph.

Google To Kick Off X Prize’s Next Fundraising Campaign

2007: X Prize’s mission was to “create radical breakthroughs for the benefit of humanity.”

Aussies Turn Out For Battle Of Sydney; Google Gets Shot Down

2007: Google’s flyover to snap imagery of the Sydney landscape and residents didn’t go as planned.

Past contributions from Search Engine Land’s Subject Matter Experts (SMEs)

These columns are a snapshot in time and have not been updated since publishing, unless noted. Opinions expressed in these articles are those of the author and not necessarily Search Engine Land.

- 2019: Angular Universal: What you need to know for SEO by John Lincoln

- 2019: Learn how to manage product unavailability without hurting your SEO by Max Cyrek

- 2018: What the ROAS? A practical guide to improving return on ad spend by Jacob Baadsgaard

- 2018: What’s going on with Google brand CPC? by Andy Taylor

- 2017: Refreshing competitive search strategies in 2017 by Thomas Stern

- 2017: The desktop’s death has been greatly exaggerated: How it’s holding its own in a mobile world by Christi Olson

- 2016: 7 Ways Small Businesses Can Leverage Third-Party Apps for Local Search & Marketing by Wesley Young

- 2016: 10 Local Link Building Tips for 2016 by Greg Gifford

- 2013: SEO Smackdown Round 2: Old Vs. New Search Engine Optimization by Shari Thurow

- 2013: A Foolproof Approach To Writing Complex Excel Formulas by Annie Cushing

- 2012: Justifying Conference Attendance For In-House Search Marketers by Kelly Gillease

- 2012: Working The Funnel: Finding Value In Non-Converting Events by Benny Blum

- 2011: A Link Building Blueprint: The Foundation by Debra Mastaler

- 2011: Yandex & Seznam: Local Powers That Be In Europe by Bas van den Beld

- 2011: 5 Social Sites You May Not Have Heard About (Yet) by Greg Finn

- 2010: Overcoming The SEO Challenges Of Huge Online Commerce Sites by Eric Enge

- 2010: Broad Match + Negative Keywords = A Profitable Long Tail by Brad Geddes

- 2010: Six Odd Tactics For Getting Ads Into Google Maps by Chris Silver Smith

- 2008: Two Approaches To Determining Intent: The Wisdom Of Crowds And Personal Values by Gord Hotchkiss

- 2007: Linking The Unlinkable: When Digg Won’t Work by Nick Wilson

The post This day in search marketing history: February 1 appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/WLdyJBS

Webinar: Kickstart your first-party data strategy by Cynthia Ramsaran

With the inevitable demise of cookies, marketers are scrambling for ways to deliver personalized experiences with user consent, all without compromising convenience or security. Today, many are turning to the login box as a critical first step in executing an effective first-party data strategy. It’s no longer just a security requirement – it’s now the doorway into their brand’s digital user experience.

Join Salman Ladha, senior product marketing manager from Okta, as he shares techniques that marketing and digital teams can use to remove friction from their login experience – leading to improved acquisition, retention, and increased revenues.

Register today for “Kickstart Your First-Party Data Strategy,” presented by Okta.

Click here to view more Search Engine Land webinars.

The post Webinar: Kickstart your first-party data strategy appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/yF82Qch

Twitter announces the end of CoTweets

Twitter has just announced the end of CoTweets. They put out a statement in their Help Center that the new feature, which started in July, will be sunset by the end of the day on January 31, 2023.

A CoTweet is a tweet that has two authors’ profile pictures and user names. A CoTweet appears on both users’ profiles and is shown to all of their followers.

Twitter warned us about this. When the CoTweet experiment was announced, it was also made known that this may not be a permanent feature and that it could go away.

Why we care. This feature was a short-lived experiment with the potential for brands and individuals to reach wider audiences. Evidently, Twitter under Elon Musk deemed the feature a failure after it did not meet expectations.

The post Twitter announces the end of CoTweets appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/pdWjMq3

Monday, January 30, 2023

This day in search marketing history: January 31

Google releases URL Inspection Tool API

In 2022, Google released a new API under the Search Console APIs for the URL Inspection Tool. The new URL Inspection API let you programmatically access the data and reporting you’d get from the URL Inspection Tool.

Google’s URL Inspection Tool API had a limit of 2,000 queries per day and 600 queries per minute.
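To make those quota figures concrete, here is a minimal planning sketch in Python. It makes no API calls. It only works out how a list of URLs would need to be split up, assuming the limits quoted above (2,000 queries per day, 600 per minute). The function names `plan_inspections` and `daily_batches` are hypothetical helpers for illustration, not part of any Google client library.

```python
import math

# Limits as quoted in the article; treat them as assumptions that may change.
DAILY_LIMIT = 2000       # queries per day
PER_MINUTE_LIMIT = 600   # queries per minute


def plan_inspections(total_urls, daily_limit=DAILY_LIMIT,
                     per_minute_limit=PER_MINUTE_LIMIT):
    """Return (days_needed, minutes_per_busiest_day) for a URL count.

    days_needed: how many daily quotas the full list consumes.
    minutes_per_busiest_day: the minimum number of minutes the largest
    daily batch must be spread over to stay under the per-minute limit.
    """
    if total_urls < 0:
        raise ValueError("total_urls must be non-negative")
    days_needed = math.ceil(total_urls / daily_limit)
    busiest_day = min(total_urls, daily_limit)
    minutes_per_busiest_day = math.ceil(busiest_day / per_minute_limit)
    return days_needed, minutes_per_busiest_day


def daily_batches(urls, daily_limit=DAILY_LIMIT):
    """Yield consecutive slices of `urls`, each small enough for one day."""
    for start in range(0, len(urls), daily_limit):
        yield urls[start:start + daily_limit]
```

For example, a site with 5,000 important pages would need three days of quota, and each full daily batch would have to be spread over at least four minutes.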

Google provided some use cases for the API:

- SEO tools and agencies can provide ongoing monitoring for important pages and single page debugging options. For example, checking if there are differences between user-declared and Google-selected canonicals, or debugging structured data issues from a group of pages.

- CMS and plugin developers can add page or template-level insights and ongoing checks for existing pages. For example, monitoring changes over time for key pages to diagnose issues and help prioritize fixes.
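The canonical check from the first use case could be sketched roughly as follows. This is an illustration under assumptions: it presumes the API response has already been parsed into a Python dict, and that the user-declared and Google-selected canonicals sit under `inspectionResult.indexStatusResult` as `userCanonical` and `googleCanonical`. Verify those field names against the current API reference before relying on them. The function name `canonical_mismatch` is hypothetical.

```python
def canonical_mismatch(response):
    """Compare user-declared and Google-selected canonicals.

    `response` is a dict parsed from a URL Inspection API reply.
    The nested keys used here are assumptions about the response shape.
    Returns True if both canonicals are present and differ, False if
    they match, and None if either one is missing.
    """
    index_status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    user_canonical = index_status.get("userCanonical")
    google_canonical = index_status.get("googleCanonical")
    if not user_canonical or not google_canonical:
        return None  # not enough data to compare
    return user_canonical != google_canonical


# Usage with a hand-written sample dict (not real API output):
sample = {
    "inspectionResult": {
        "indexStatusResult": {
            "userCanonical": "https://example.com/page",
            "googleCanonical": "https://example.com/page?ref=1",
        }
    }
}
print(canonical_mismatch(sample))
```

The comparison is deliberately a strict string match; any URL normalization (trailing slashes, letter case, tracking parameters) is left to the caller, since what counts as "the same URL" depends on the site.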

Within a week, several SEO professionals developed free new tools and shared scripts, and established SEO crawlers integrated this data with their own insights, as Aleyda Solis rounded up in “8 SEO tools to get Google Search Console URL Inspection API insights.”

Also on this day

Google Search Console error reporting for Breadcrumbs and HowTo structured data changed

2022: This change may have resulted in seeing more or fewer errors in your Breadcrumbs and HowTo structured data Search Console enhancement and error reports.

Recommendations roll out to Discovery campaigns

2022: Google Ads also launched auto-applied recommendations for manager accounts and more recommendations for Video campaigns.

Google Ads creates unified advertiser verification program

2022: Google would combine its advertiser identity and business operations verification programs under a unified Advertiser verification program.

Deepcrawl launches technical SEO app for Wix

2022: Designed for small and mid-sized enterprises, the app automated weekly site crawls and detected issues ranging from broken pages to content that didn’t meet best-practice guidelines for SEO.

Pinterest rolls out AR ‘Try on’ feature for furniture items

2022: The augmented reality feature, called “Try On for Home Decor,” let users see what furniture looked like in their home before buying.

Meta descriptions and branding have the most influence on search clickthrough, survey finds

2020: The majority of participants also agreed that rich results improved Google search.

Microsoft: Search advertising revenue grew slower than expected last quarter

2020: LinkedIn sessions grew faster than the previous three quarters, though revenue growth slowed slightly in the second quarter of its fiscal 2020.

Bing lets webmasters submit 10,000 URLs per day through Webmaster Tools

2019: Previously you were able to submit up to 10 URLs per day and a maximum of 50 URLs per month. Bing increased the daily limit by 1000x and removed the monthly quota.

Client in-housing, competition for talent top digital agency concerns

2019: Marketing Land’s Digital Agency Survey found the sector was weathering digital transformation well, but the growth of data-driven marketing made it clear where they needed to hire.

Quora adds search-like keyword targeting, Auction Insights for advertisers

2019: Quora introduced three new metrics (Auctions Lost to Competition, Impression Share, Absolute Impression Share) to help advertisers understand how they performed in the ad auctions.

Google’s Page Speed Update does not impact indexing

2018: Indexing and ranking are two separate processes – and this specific algorithm had no impact on indexing.

Bing Ads has a conversion tracking fix for Apple’s Intelligent Tracking Prevention

2018: Advertisers had to enable auto-tagging of the Microsoft Click ID in their accounts to get consistent ad conversion tracking from Safari.

Google EU shopping rivals complain antitrust remedies aren’t working

2018: They demanded more changes, saying their problems had intensified rather than improved since the EC ruling in June 2017.

Google mobile-friendly testing tool now has API access

2017: Developers could now build their own tools around the mobile-friendly testing tool to see if pages are mobile-friendly.

AdWords IF functions roll out for ad customization as Standard Text Ads sunset

2017: IF functions arrived to let advertisers customize ads based on device and retargeting list membership.

Google launches Ads Added by AdWords pilot: What we know so far

2017: Ads based on existing ad and landing page content were added to ad groups by Google.

New AdWords interface alpha is rolling out to more advertisers

2017: It would roll out to even more AdWords accounts in the next few months.

Majestic successfully prints the internet in 3D in outer space

2017: The 3D printer worked in outer space, and the Majestic Landscape was printed at the International Space Station.

Nadella Would Bring Search Cred To Microsoft CEO Role

2014: For the prior three years Nadella had run Microsoft’s Server & Tools business. Before that he was in charge of Bing and online advertising.

Bing Ads Editor Update Gives The Lowly “Sync Update” Window Real Functionality

2014: The sync window showed the total number of changes and the number of those that had been successfully downloaded from or posted to the account.

What Time Does Super Bowl 2014 Start? Look Up!

2014: Google showed the start time at the top of its results.

Who’s Tops? Bud Light Is Unseated As Number One Super Bowl Advertiser On Google And Bing

2014: Volkswagen garnered the top spot with the most ad impressions on Google.

Search In Pics: The Simpsons With Google Glass, Oscar Mayer Car At Google & Google Military Truck

2014: The latest images culled from the web, showing what people eat at the search engine companies, how they play, who they meet, where they speak, what toys they have, and more.

Adwords For Video Gets Reporting Enhancements

2013: Google added three new measurement features to the AdWords for video reporting interface (Reach & Frequency, Column Sets Tailored to Marketing Goals, Geographic Visualization).

Jackie Robinson Google Baseball Player Logo

2013: The Doodle honored Robinson on what would have been his 94th birthday.

Google & Bing: We’re Not Involved In “Local Paid Inclusion”

2012: A program that guaranteed top listings for local searches on Google, Yahoo and Bing? An “officially approved” one in “cooperation” with those search engines? Not true, said Google and Bing.

Report: Search Ad Spend To Rise 27% In 2012

2012: Search ad spend was expected to grow 27% from 2011 to 2012, up from $15.36 billion to $19.51 billion. And by 2016, it was expected to reach almost $30 billion annually.

Matt Cutts Convinces Some South Korean Govt. Websites To Stop Blocking Googlebot

2012: Cutts managed to singlehandedly convince some government reps to let Googlebot crawl and index their websites.

DOJ Exploring “Search Fairness” With Google As Rivals Protest Potential ITA Licensing Deal

2011: FairSearch.org opposed any such potential licensing deal.

Review Sites’ Rancor Rises With Prominence of Google Place Pages

2011: Google’s relationship with review sites like TripAdvisor and Yelp was as complicated as ever.

Google’s Android Now “The World’s Leading Smartphone Platform”: Report

2011: More Android handsets were shipped in Q4 2010 than handsets on any other platform.

Blekko Launches Mobile Apps For iPhone, Android

2011: Slashtags and the personalization that Blekko offered were even better suited to the mobile search use case in some respects.

Topsy Social Analytics: Twitter Analytics For The Masses (& Free, Too)

2011: You could analyze domains, Twitter usernames, or keywords, and compare them over four timeframes: one day, a week, two weeks or a month.

Google Executive Believed Missing After Egypt Protests

2011: Wael Ghonim went missing not long after tweeting about being “very worried” and “ready to die.”

Apple CEO: Google Wants To “Kill The iPhone”

2010: “We did not enter the search business,” Jobs said. “They entered the phone business. Make no mistake they want to kill the iPhone. We won’t let them.”

Google Gets Fearful, Flags Entire Internet As Malware Briefly

2009: Due to a human error, Google told users “This site may harm your computer” for every website listed in search results.

Google Revenues Up 51 Percent, Social Networking Monetization “Disappointing”

2008: Google’s Q4 2007 revenues were $4.83 billion, compared with $3.21 billion the year before.

Google’s Marissa Mayer On Social Search / Search 4.0

2008: How the search engine was considering using social data to improve its search results.

Report: Click Fraud Up 15% In 2007

2008: The overall industry average click fraud rate rose to 16.6% for Q4 2007. That was up from 14.2% for the same quarter in 2006, and 16.2% in Q3 2007.

New “Show Search Options” Broadens Google Maps

2008: A pull-down menu allowed users to narrow or expand results for the same query and more easily discover non-traditional content in Google Maps.

Google’s Founders & CEO Promised To Work Together Until 2024

2008: Spoiler alert: Schmidt left Google’s parent company Alphabet for good in February 2020.

Google Reports Revenues Up 19 Percent From Previous Quarter

2007: Google reported revenues of $3.21 billion for Q4 2006, representing a 67% increase over Q4 2005 revenues of $1.92 billion.

Google Pushes Back On Click Fraud Estimates, Says Don’t Forget The Back Button

2007: Google’s Shuman Ghosemajumder said some third-party auditing firms don’t appear to be matching up estimated fraud figures with refunds or even actual clicks registered by advertisers.

Google, Microsoft, & Yahoo Ask For Help With International Censorship

2007: The search engines had to make “moral judgments” about international authorities’ requests for information when they did not have to do the same for US requests.

Yahoo To Build New Keyword Research Tool & Wordtracker Launches Free Tool

2007: YSM’s public keyword research tool was sporadically offline, but Yahoo had plans to offer a new public keyword research tool.

Gmail Locks Out User For Using Greasemonkey & Reports Of Gmail Contacts Disappearing

2007: The account was disabled for 24 hours due to “unusual usage.”

Yahoo To Build “Brand Universe” To Connect Entertainment Brands

2007: Brand Universe would create about 100 websites built around entertainment brands and pull together content from various Yahoo properties.

Google Can’t Use “Gmail” Name In Europe

2007: Due to an existing trademark on the term.

Boorah Restaurant Reviews: Zagat On Steroids

2007: Boorah collected reviews from existing local search and content sites, summarized and enhanced the data and built additional features on top.

Q&A With Stephen Baker, CEO Of Reed Business Search

2007: What was new with Zibb, a B2B search engine, and the opportunities he saw going forward in B2B search.

January 2007: Search Engine Land’s Most Popular Stories

Past contributions from Search Engine Land’s Subject Matter Experts (SMEs)

These columns are a snapshot in time and have not been updated since publishing, unless noted. Opinions expressed in these articles are those of the author and not necessarily Search Engine Land.

- 2019: Getting started with Google Search Console by Detlef Johnson

- 2017: The PPC industry would not exist under Trump’s immigration policy by Frederick Vallaeys

- 2017: How machine learning impacts the need for quality content by Eric Enge

- 2017: How to go above and beyond with your content by Julie Joyce

- 2014: Single Page Websites & SEO by Tom Schmitz

- 2014: 4 Content Marketing Strategies That Still Build Links by Nate Dame

- 2013: German Parliament Hears Experts On Proposed Law To Limit Search Engines From Using News Content by Mathias Schindler

- 2013: The Christmas Jump, Tablet Hump & CPC Bump: Recent Trends In Mobile Usage by Siddharth Shah

- 2013: 9 SEO Quirks You Should Be Aware Of by Tom Schmitz

- 2013: Will Facebook’s Graph Search Be Big For Bing Advertisers? by Mark Ballard

- 2012: The Ultimate Guide To Enterprise SEO: 25 Things To Know Before You Take The Plunge by Brian Provost

- 2012: 3 Essential Features For Multinational Content Delivery by Chris Liversidge

- 2012: Link Building Tool Review: WordTracker Link Builder by Debra Mastaler

- 2012: 12 Steps To Optimize A Webpage For Organic Keywords by George Aspland

- 2012: Google+ Growing Your Social Network: Quantity vs. Quality by Aaron Friedman

- 2011: The Rise And Fall Of Content Farms by Eric Enge

- 2011: 6 Tactics That May Put You At Risk Of Being Banned From AdWords by Brad Geddes

- 2011: Advanced Development Should Be The Future For Yellow Pages by Chris Silver Smith

- 2008: 5 Reasons Why Rankings Are A Poor Measure Of Success by Jill Whalen

- 2008: Making a Good Impression With About Us Pages by Bill Slawski

- 2008: Internet Yellow Page Video SEM: Worth The Effort? by Grant Crowell

The post This day in search marketing history: January 31 appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/st6ploO

New updates for the GA4 search bar

Google has released three new updates for the GA4 dashboard, allowing advertisers to find information about current properties or accounts.

Dig deeper. The following updates were posted by Google on their Analytics Help documentation.

Find data stream details

The following search terms allow you to open the details for a web or app data stream in the property you are using:

- the keyword “Tracking”

- a web stream measurement ID (i.e., “G-XXXXXXX”)

- an app stream ID (i.e., “XXXXXXX”)

Find the current property and account settings

The following search terms allow you to open the settings for the property you are using:

- the keyword “Property”

- the current property ID or property name

The following search terms allow you to open the settings for the account you are using:

- the keyword “Account”

- the current account ID or account name

Go to other Google Analytics 4 properties

The following search terms allow you to navigate to a different Google Analytics 4 property from the one you are using. Analytics shows you up to 7 properties that match the search query.

- the property ID or property name of the other property

- a web stream measurement ID (i.e., “G-XXXXXXX”) in the other property

- an app stream ID (i.e., “XXXXXXX”) in the other property

Why we care. The additional information will help advertisers analyze streams, accounts, and properties in their GA4 accounts.

The post New updates for the GA4 search bar appeared first on Search Engine Land.

from Search Engine Land https://ift.tt/A1Rab3Y

Yandex scrapes Google and other SEO learnings from the source code leak

“Fragments” of Yandex’s codebase leaked online last week. Much like Google, Yandex is a platform with many aspects such as email, maps, a taxi service, etc. The code leak featured chunks of all of it.

According to the documentation therein, Yandex’s codebase was folded into one large repository called Arcadia in 2013. The leaked codebase is a subset of all projects in Arcadia and we find several components in it related to the search engine in the “Kernel,” “Library,” “Robot,” “Search,” and “ExtSearch” archives.

The move is wholly unprecedented. Not since the AOL search query data of 2006 has something so material related to a web search engine entered the public domain.

Although we are missing the data and many files that are referenced, this is the first instance of a tangible look at how a modern search engine works at the code level.

Personally, I can’t get over how fantastic the timing is to be able to actually see the code as I finish my book “The Science of SEO” where I’m talking about Information Retrieval, how modern search engines actually work, and how to build a simple one yourself.

In any event, I’ve been parsing through the code since last Thursday and any engineer will tell you that is not enough time to understand how everything works. So, I suspect there will be several more posts as I keep tinkering.

Before we jump in, I want to give a shout-out to Ben Wills at Ontolo for sharing the code with me, pointing me in the initial direction of where the good stuff is, and going back and forth with me as we deciphered things. Feel free to grab the spreadsheet with all the data we’ve compiled about the ranking factors here.

Also, shout out to Ryan Jones for digging in and sharing some key findings with me over IM.

OK, let’s get busy!

It’s not Google’s code, so why do we care?

Some believe that reviewing this codebase is a distraction and that there is nothing that will impact how they make business decisions. I find that curious considering these are people from the same SEO community that used the CTR model from the 2006 AOL data as the industry standard for modeling across any search engine for many years to follow.

That said, Yandex is not Google. Yet the two are state-of-the-art web search engines that have continued to stay at the cutting edge of technology.

Software engineers from both companies go to the same conferences (SIGIR, ECIR, etc) and share findings and innovations in Information Retrieval, Natural Language Processing/Understanding, and Machine Learning. Yandex also has a presence in Palo Alto and Google previously had a presence in Moscow.

A quick LinkedIn search uncovers a few hundred engineers that have worked at both companies, although we don’t know how many of them have actually worked on Search at both companies.

In a more direct overlap, Yandex also makes use of Google’s open source technologies that have been critical to innovations in Search like TensorFlow, BERT, MapReduce, and, to a much lesser extent, Protocol Buffers.

So, while Yandex is certainly not Google, it’s also not some random research project that we’re talking about here. There is a lot we can learn about how a modern search engine is built from reviewing this codebase.

At the very least, we can disabuse ourselves of some obsolete notions that still permeate SEO tools like text-to-code ratios and W3C compliance or the general belief that Google’s 200 signals are simply 200 individual on and off-page features rather than classes of composite factors that potentially use thousands of individual measures.

Some context on Yandex’s architecture

Without context or the ability to successfully compile, run, and step through it, source code is very difficult to make sense of.

Typically, new engineers get documentation and walk-throughs, and engage in pair programming, to get onboarded to an existing codebase. There is some limited onboarding documentation related to setting up the build process in the docs archive. However, Yandex’s code references internal wikis throughout that have not leaked, and the commenting in the code is quite sparse.

Luckily, Yandex does give some insights into its architecture in its public documentation. There are also a couple of patents they’ve published in the US that help shed a bit of light. Namely:

- Computer-implemented method of and system for searching an inverted index having a plurality of posting lists

- Search result ranker

As I’ve been researching Google for my book, I’ve developed a much deeper understanding of the structure of its ranking systems through various whitepapers, patents, and talks from engineers couched against my SEO experience. I’ve also spent a lot of time sharpening my grasp of general Information Retrieval best practices for web search engines. It comes as no surprise that there are indeed some best practices and similarities at play with Yandex.

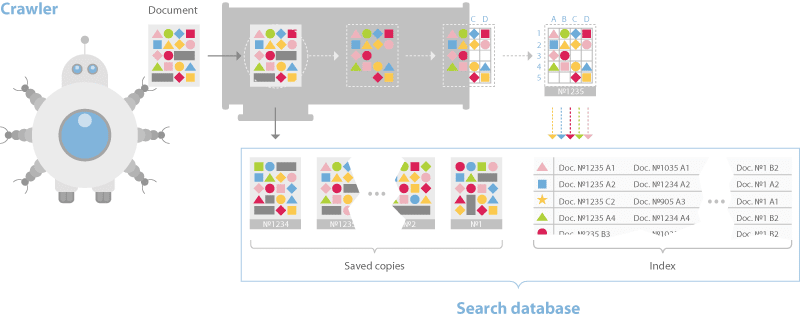

Yandex’s documentation discusses a dual distributed crawler system: one crawler for real-time crawling called the “Orange Crawler” and another for general crawling.

Historically, Google is said to have had an index stratified into three buckets: one for real-time crawl, one for regularly crawled pages, and one for rarely crawled pages. This approach is considered a best practice in IR.

Yandex and Google differ in this respect, but the general idea of segmented crawling driven by an understanding of update frequency holds.

One thing worth calling out is that Yandex has no separate rendering system for JavaScript. They say this in their documentation and, although they have a Webdriver-based system for visual regression testing called Gemini, they limit themselves to a text-based crawl.

The documentation also discusses a sharded database structure that breaks pages down into an inverted index and a document server.

Just like most other web search engines, the indexing process builds a dictionary, caches pages, and then places data into the inverted index such that bigrams and trigrams and their placement in the document are represented.

This differs from Google in that Google moved to phrase-based indexing, meaning n-grams that can be much longer than trigrams, a long time ago.
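To make the idea of an inverted index that records bigrams, trigrams, and their placement more concrete, here is a minimal sketch. It assumes word-level n-grams and whitespace tokenization; the function name and the toy documents are hypothetical and are not taken from the Yandex codebase.

```python
from collections import defaultdict


def build_ngram_index(docs: dict[str, str], sizes=(2, 3)):
    """Map each word n-gram to {doc_id: [token positions where it starts]}."""
    index = defaultdict(lambda: defaultdict(list))
    for doc_id, text in docs.items():
        tokens = text.lower().split()  # naive tokenizer, for illustration only
        for n in sizes:
            for pos in range(len(tokens) - n + 1):
                ngram = " ".join(tokens[pos:pos + n])
                index[ngram][doc_id].append(pos)
    return index


# Hypothetical toy documents
docs = {
    "d1": "yandex source code leak",
    "d2": "the source code of a search engine",
}
index = build_ngram_index(docs)
source_code_postings = dict(index["source code"])
```

Looking up a phrase then becomes a dictionary access that returns both the matching documents and where in each document the phrase occurs.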

However, the Yandex system uses BERT in its pipeline as well, so at some point documents and queries are converted to embeddings and nearest neighbor search techniques are employed for ranking.

The ranking process is where things begin to get more interesting.

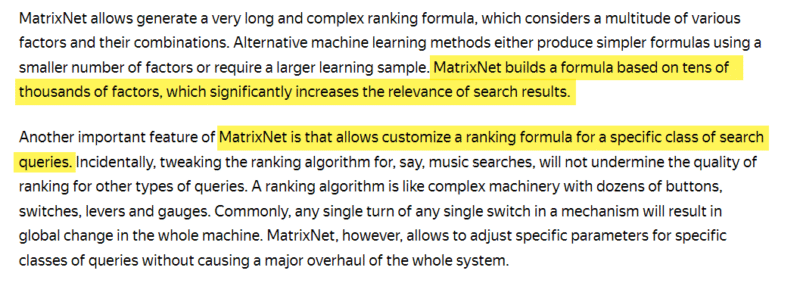

Yandex has a layer called Metasearch where cached popular search results are served after they process the query. If the results are not found there, then the search query is sent to a series of thousands of different machines in the Basic Search layer simultaneously. Each builds a posting list of relevant documents then returns it to MatrixNet, Yandex’s neural network application for re-ranking, to build the SERP.
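The flow described above (check a cache of popular results, otherwise fan the query out to many machines and merge what comes back) can be sketched roughly as follows. Everything here is an assumption made for illustration: the shard contents, the function names, and the use of per-shard scores as a stand-in for the re-ranking that the article attributes to MatrixNet.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor

# Hypothetical shards: each maps a query term to (doc_id, score) postings.
SHARDS = [
    {"leak": [("doc1", 0.9), ("doc2", 0.4)]},
    {"leak": [("doc3", 0.7)], "yandex": [("doc4", 0.8)]},
]
result_cache: dict[str, list[tuple[str, float]]] = {}


def search_shard(shard, query):
    """Return this shard's posting list for the query (empty if no match)."""
    return shard.get(query, [])


def metasearch(query, top_k=10):
    # 1. Serve cached results for queries that were already answered.
    if query in result_cache:
        return result_cache[query]
    # 2. Otherwise send the query to every shard at the same time.
    with ThreadPoolExecutor(max_workers=len(SHARDS)) as pool:
        partials = pool.map(lambda shard: search_shard(shard, query), SHARDS)
        candidates = [hit for partial in partials for hit in partial]
    # 3. Merge the posting lists; a real system would re-rank them here.
    results = heapq.nlargest(top_k, candidates, key=lambda hit: hit[1])
    result_cache[query] = results
    return results


first_results = metasearch("leak")
```

A second call with the same query never touches the shards, which is the point of keeping popular results in the Metasearch layer.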

Based on videos wherein Google engineers have talked about Search’s infrastructure, that ranking process is quite similar to Google Search. They talk about Google’s tech being in shared environments where various applications are on every machine and jobs are distributed across those machines based on the availability of computing power.

One of the use cases is exactly this: the distribution of queries to an assortment of machines to process the relevant index shards quickly. Computing the posting lists is the first place that we need to consider the ranking factors.

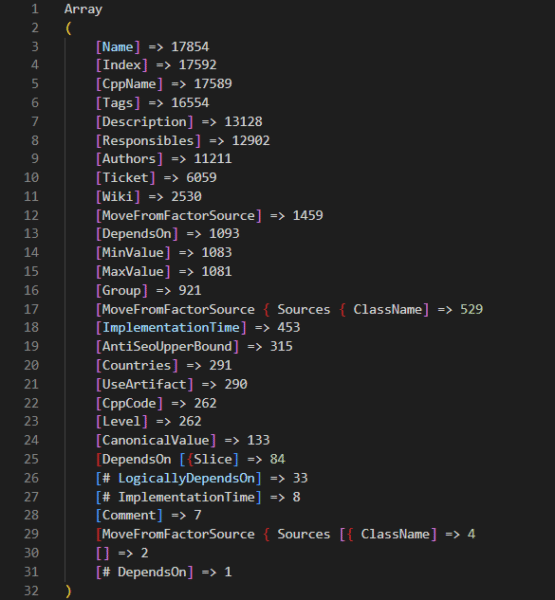

There are 17,854 ranking factors in the codebase

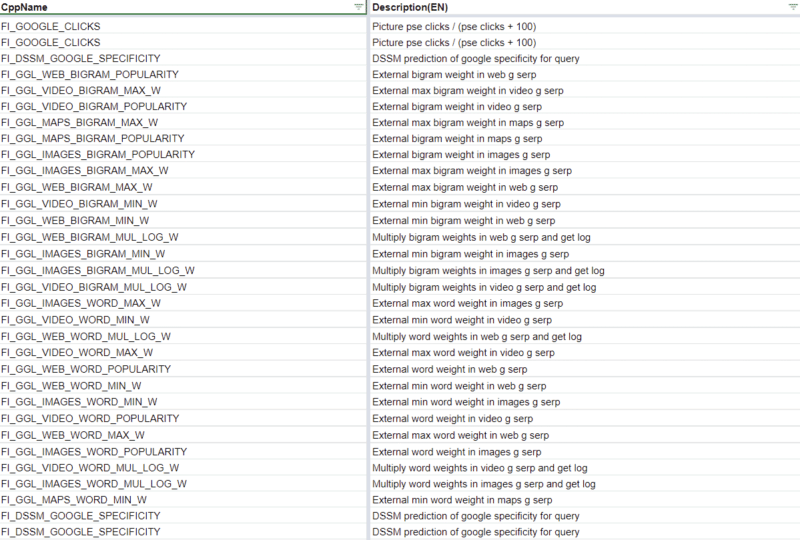

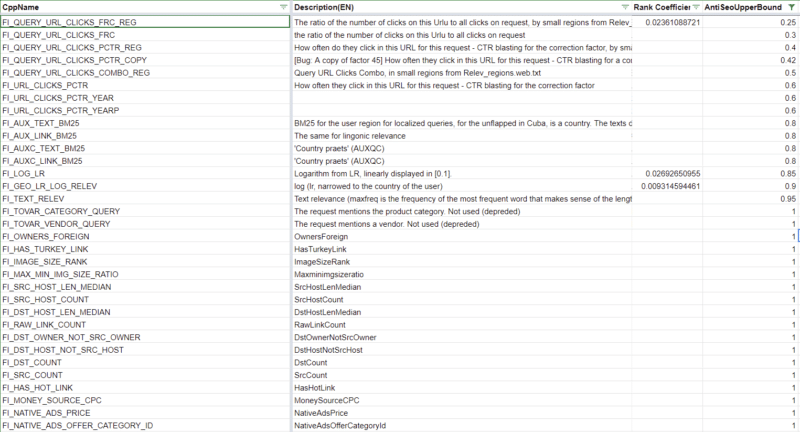

On the Friday following the leak, the inimitable Martin MacDonald eagerly shared a file from the codebase called web_factors_info/factors_gen.in. The file comes from the “Kernel” archive in the codebase leak and features 1,922 ranking factors.

Naturally, the SEO community has run with that number and that file to spread news of the insights therein. Many folks have translated the descriptions and built tools, or used Google Sheets and ChatGPT, to make sense of the data. All of which are great examples of the power of the community. However, that 1,922 figure represents just one of many sets of ranking factors in the codebase.

A deeper dive into the codebase reveals that there are numerous ranking factor files for different subsets of Yandex’s query processing and ranking systems.

Combing through those, we find that there are actually 17,854 ranking factors in total. Included in those ranking factors are a variety of metrics related to:

- Clicks.

- Dwell time.

- Data from Metrika, Yandex’s Google Analytics equivalent.

There is also a series of Jupyter notebooks that have an additional 2,000 factors outside of those in the core code. Presumably, these Jupyter notebooks represent tests where engineers are considering additional factors to add to the codebase. Again, you can review all of these features with metadata that we collected from across the codebase at this link.

Yandex’s documentation further clarifies that they have three classes of ranking factors: Static, Dynamic, and those related specifically to the user’s search and how it was performed. In their own words:

In the codebase these are indicated in the rank factors files with the tags TG_STATIC and TG_DYNAMIC. The search related factors have multiple tags such as TG_QUERY_ONLY, TG_QUERY, TG_USER_SEARCH, and TG_USER_SEARCH_ONLY.

While we have uncovered a potential 18k ranking factors to choose from, the documentation related to MatrixNet indicates that scoring is built from tens of thousands of factors and customized based on the search query.

This indicates that the ranking environment is highly dynamic, similar to Google’s. According to Google’s “Framework for evaluating scoring functions” patent, they have long had something similar where multiple functions are run and the best set of results is returned.

Finally, considering that the documentation references tens of thousands of ranking factors, we should also keep in mind that there are many other files referenced in the code that are missing from the archive. So, there is likely more going on that we are unable to see. This is further illustrated by reviewing the images in the onboarding documentation, which show other directories that are not present in the archive.

For instance, I suspect there is more related to the DSSM in the /semantic-search/ directory.

The initial weighting of ranking factors

I first operated under the assumption that the codebase didn’t have any weights for the ranking factors. Then I was shocked to see that the nav_linear.h file in the /search/relevance/ directory features the initial coefficients (or weights) associated with ranking factors on full display.

This section of the code highlights 257 of the 17,000+ ranking factors we’ve identified. (Hat tip to Ryan Jones for pulling these and lining them up with the ranking factor descriptions.)

For clarity, when you think of a search engine algorithm, you’re probably thinking of a long and complex mathematical equation by which every page is scored based on a series of factors. While that is an oversimplification, the following screenshot is an excerpt of such an equation. The coefficients represent how important each factor is, and the resulting computed score is what would be used to score selected pages for relevance.

These values being hard-coded suggests that this is certainly not the only place that ranking happens. Instead, this function is most likely where the initial relevance scoring is done to generate a series of posting lists for each shard being considered for ranking. In the first patent listed above, they talk about this as a concept of query-independent relevance (QIR) which then limits documents prior to reviewing them for query-specific relevance (QSR).

The resulting posting lists are then handed off to MatrixNet with query features to compare against. So while we don’t know the specifics of the downstream operations (yet), these weights are still valuable to understand because they tell you the requirements for a page to be eligible for the consideration set.
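As a rough illustration of what a hard-coded linear scoring function looks like, here is a sketch that combines a handful of the coefficients quoted in this article. The feature values for the two pages are made up, the assumption that each feature is scaled to the 0 to 1 range is mine, and the real function combines 257 factors and may apply transformations that are not shown here.

```python
# A few of the initial coefficients quoted in this article.
INITIAL_WEIGHTS = {
    "FI_URL_DOMAIN_FRACTION": 0.5640952971,
    "FI_IS_COM": 0.2762504972,
    "FI_PAGE_RANK": 0.1828678331,
    "FI_ADV": -0.2509284637,
}


def initial_relevance(features: dict[str, float]) -> float:
    """Weighted sum of feature values; missing features count as 0."""
    return sum(
        weight * features.get(name, 0.0)
        for name, weight in INITIAL_WEIGHTS.items()
    )


# Hypothetical pages that differ only in whether they carry ads.
page_without_ads = {
    "FI_URL_DOMAIN_FRACTION": 0.5,
    "FI_IS_COM": 1.0,
    "FI_PAGE_RANK": 0.3,
    "FI_ADV": 0.0,
}
page_with_ads = {**page_without_ads, "FI_ADV": 1.0}

score_without_ads = initial_relevance(page_without_ads)
score_with_ads = initial_relevance(page_with_ads)
```

With everything else held equal, the page with ads scores lower by exactly the magnitude of the FI_ADV coefficient, which is how a single negative weight can push a page out of the consideration set.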

However, that brings up the next question: what do we know about MatrixNet?

There is neural ranking code in the Kernel archive and there are numerous references to MatrixNet and “mxnet” as well as many references to Deep Structured Semantic Models (DSSM) throughout the codebase.

The description of the FI_MATRIXNET ranking factor indicates that MatrixNet is applied to all factors.

    Factor {
        Index: 160
        CppName: "FI_MATRIXNET"
        Name: "MatrixNet"
        Tags: [TG_DOC, TG_DYNAMIC, TG_TRANS, TG_NOT_01, TG_REARR_USE, TG_L3_MODEL_VALUE, TG_FRESHNESS_FROZEN_POOL]
        Description: "MatrixNet is applied to all factors – the formula"
    }

There’s also a bunch of binary files that may be the pre-trained models themselves, but it’s going to take me more time to unravel those aspects of the code.

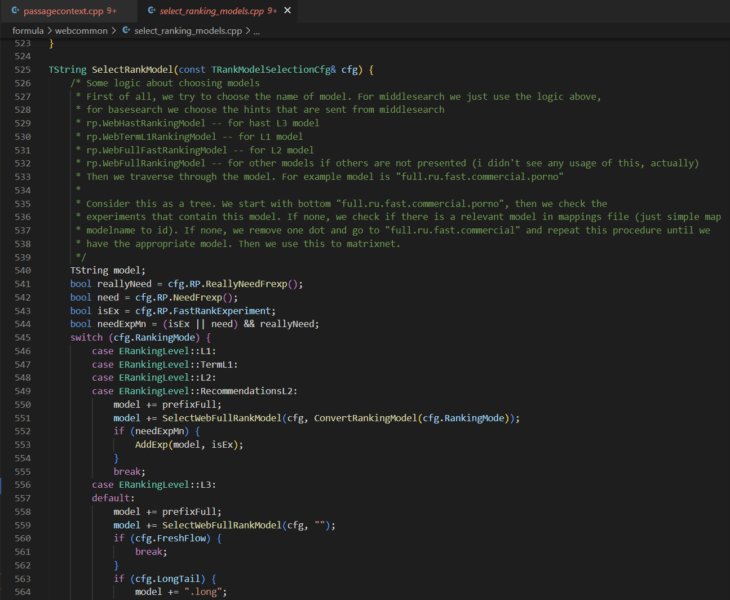

What is immediately clear is that there are multiple levels to ranking (L1, L2, L3) and there is an assortment of ranking models that can be selected at each level.

The selecting_rankings_model.cpp file suggests that different ranking models may be considered at each layer throughout the process. This is basically how neural networks work. Each level is an aspect that completes operations and their combined computations yield the re-ranked list of documents that ultimately appears as a SERP. I’ll follow up with a deep dive on MatrixNet when I have more time. For those that need a sneak peek, check out the Search result ranker patent.

For now, let’s take a look at some interesting ranking factors.

Top 5 negatively weighted initial ranking factors

The following is a list of the highest negatively weighted initial ranking factors with their weights and a brief explanation based on their descriptions translated from Russian.

- FI_ADV: -0.2509284637 – This factor determines that there is advertising of any kind on the page and issues the heaviest weighted penalty for a single ranking factor.

- FI_DATER_AGE: -0.2074373667 – This factor is the difference between the current date and the date of the document determined by a dater function. The value is 1 if the document date is the same as today, 0 if the document is 10 years or older, or if the date is not defined. This indicates that Yandex has a preference for older content.

- FI_QURL_STAT_POWER: -0.1943768768 – This factor is the number of URL impressions as it relates to the query. It seems as though they want to demote a URL that appears in many searches to promote diversity of results.

- FI_COMM_LINKS_SEO_HOSTS: -0.1809636391 – This factor is the percentage of inbound links with “commercial” anchor text. The factor reverts to 0.1 if the proportion of such links is more than 50%, otherwise, it’s set to 0.

- FI_GEO_CITY_URL_REGION_COUNTRY: -0.168645758 – This factor is the geographical coincidence of the document and the country that the user searched from. This one doesn’t quite make sense if 1 means that the document and the country match.

In summary, these factors indicate that, for the best score, you should:

- Avoid ads.

- Update older content rather than make new pages.

- Make sure most of your links have branded anchor text.

Everything else in this list is beyond your control.
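To show how two of the factor descriptions above might translate into feature values, here is a small sketch of FI_DATER_AGE and FI_COMM_LINKS_SEO_HOSTS. The descriptions only give the endpoints for the date factor (1 for today, 0 for ten years or older or for an undefined date), so the linear decay in between is my assumption, as are the function and parameter names.

```python
from datetime import date
from typing import Optional

TEN_YEARS_IN_DAYS = 3650  # assumed approximation of "10 years"


def dater_age(doc_date: Optional[date], today: date) -> float:
    """1.0 for a document dated today, 0.0 at ten years or with no date."""
    if doc_date is None:
        return 0.0
    age_days = max((today - doc_date).days, 0)
    if age_days >= TEN_YEARS_IN_DAYS:
        return 0.0
    # Linear decay between the two endpoints is an assumption.
    return 1.0 - age_days / TEN_YEARS_IN_DAYS


def comm_links_seo_hosts(commercial_links: int, total_links: int) -> float:
    """0.1 when more than half of inbound links have commercial anchors."""
    if total_links == 0:
        return 0.0
    return 0.1 if commercial_links / total_links > 0.5 else 0.0


today = date(2023, 1, 31)
fresh_value = dater_age(today, today)
old_value = dater_age(date(2010, 1, 1), today)
```

Because the coefficient for FI_DATER_AGE is negative, the fresh document's value of 1.0 subtracts the most from its initial score while the old document's value of 0.0 subtracts nothing.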

Top 5 positively weighted initial ranking factors

To follow up, here’s a list of the highest weighted positive ranking factors.

- FI_URL_DOMAIN_FRACTION: +0.5640952971 – This factor is a strange masking overlap of the query versus the domain of the URL. The example given is Chelyabinsk lottery, which is abbreviated as chelloto. To compute this value, Yandex finds the three-letter combinations that are covered (che, hel, lot, olo) and sees what proportion of all the three-letter combinations are in the domain name.

- FI_QUERY_DOWNER_CLICKS_COMBO: +0.3690780393 – The description of this factor says that it is “cleverly combined of FRC and pseudo-CTR.” There is no immediate indication of what FRC is.

- FI_MAX_WORD_HOST_CLICKS: +0.3451158835 – This factor is the clickability of the most important word in the domain. For example, for all queries that contain the word “wikipedia,” it looks at the clicks on Wikipedia pages.

- FI_MAX_WORD_HOST_YABAR: +0.3154394573 – The factor description says “the most characteristic query word corresponding to the site, according to the bar.” I’m assuming this means the keyword most searched for in Yandex Toolbar associated with the site.

- FI_IS_COM: +0.2762504972 – The factor is that the domain is a .COM.

In other words:

- Play word games with your domain.

- Make sure it’s a dot com.

- Encourage people to search for your target keywords in the Yandex Bar.

- Keep driving clicks.
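The three-letter overlap described for FI_URL_DOMAIN_FRACTION can be sketched as follows. This is one reading of the translated description rather than Yandex's code: I'm assuming the value is the share of the domain's letter trigrams that also occur inside a query word, and the function names are hypothetical.

```python
def char_trigrams(text: str) -> set[str]:
    """All three-letter windows in the text, ignoring non-alphanumerics."""
    letters = "".join(ch for ch in text.lower() if ch.isalnum())
    return {letters[i:i + 3] for i in range(len(letters) - 2)}


def url_domain_fraction(query: str, domain_label: str) -> float:
    """Share of the domain's trigrams that are covered by the query words."""
    query_trigrams: set[str] = set()
    for word in query.split():  # trigrams do not span word boundaries
        query_trigrams |= char_trigrams(word)
    domain_trigrams = char_trigrams(domain_label)
    if not domain_trigrams:
        return 0.0
    return len(domain_trigrams & query_trigrams) / len(domain_trigrams)


fraction = url_domain_fraction("Chelyabinsk lottery", "chelloto")
```

Under this reading, a domain that stitches together fragments of the query words earns a high value even though it matches no whole word, which is why "word games" with the domain name could pay off.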

There are plenty of unexpected initial ranking factors

What’s more interesting in the initial weighted ranking factors are the unexpected ones. The following is a list of seventeen factors that stood out.

- FI_PAGE_RANK: +0.1828678331 – PageRank is the 17th highest weighted factor in Yandex. They previously removed links from their ranking system entirely, so it’s not too shocking how low it is on the list.

- FI_SPAM_KARMA: +0.00842682963 – The spam karma is named after “antispammers” and is the likelihood that the host is spam, based on Whois information.

- FI_SUBQUERY_THEME_MATCH_A: +0.1786465163 – How closely the query and the document match thematically. This is the 19th highest weighted factor.

- FI_REG_HOST_RANK: +0.1567124399 – Yandex has a host (or domain) ranking factor.

- FI_URL_LINK_PERCENT: +0.08940421124 – Ratio of links whose anchor text is a URL (rather than text) to the total number of links.

- FI_PAGE_RANK_UKR: +0.08712279101 – There is a specific Ukrainian PageRank.

- FI_IS_NOT_RU: +0.08128946612 – It’s a positive thing if the domain is not a .RU. Apparently, the Russian search engine doesn’t trust Russian sites.

- FI_YABAR_HOST_AVG_TIME2: +0.07417219313 – This is the average dwell time as reported by YandexBar.

- FI_LERF_LR_LOG_RELEV: +0.06059448504 – This is link relevance based on the quality of each link.

- FI_NUM_SLASHES: +0.05057609417 – The number of slashes in the URL is a ranking factor.

- FI_ADV_PRONOUNS_PORTION: -0.001250755075 – The proportion of pronoun nouns on the page.

- FI_TEXT_HEAD_SYN: -0.01291908335 – The presence of [query] words in the header, taking into account synonyms.

- FI_PERCENT_FREQ_WORDS: -0.02021022114 – The percentage of all words in the text that are among the 200 most frequent words of the language.

- FI_YANDEX_ADV: -0.09426121965 – Getting more specific with the distaste towards ads, Yandex penalizes pages with Yandex ads.

- FI_AURA_DOC_LOG_SHARED: -0.09768630485 – The logarithm of the number of shingles (areas of text) in the document that are not unique.

- FI_AURA_DOC_LOG_AUTHOR: -0.09727752961 – The logarithm of the number of shingles on which this owner of the document is recognized as the author.

- FI_CLASSIF_IS_SHOP: -0.1339319854 – Apparently, Yandex is going to give you less love if your page is a store.

The primary takeaway from reviewing these odd ranking factors and the array of those available across the Yandex codebase is that there are many things that could be a ranking factor.

I suspect that Google’s reported “200 signals” are actually 200 classes of signal where each signal is a composite built of many other components. In much the same way that Google Analytics has dimensions with many metrics associated, Google Search likely has classes of ranking signals composed of many features.

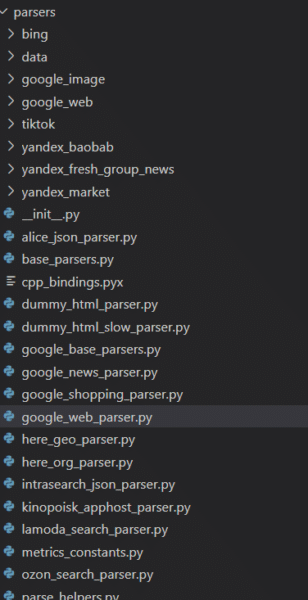

Yandex scrapes Google, Bing, YouTube and TikTok

The codebase also reveals that Yandex has many parsers for other websites and their respective services. To Westerners, the most notable of those are the ones I’ve listed in the heading above. Additionally, Yandex has parsers for a variety of services that I was unfamiliar with as well as those for its own services.

What is immediately evident is that the parsers are feature complete. Every meaningful component of the Google SERP is extracted. In fact, anyone that might be considering scraping any of these services might do well to review this code.

There is other code that indicates Yandex is using some Google data as part of the DSSM calculations, but the 83 Google-named ranking factors themselves make it clear that Yandex has leaned on Google’s results pretty heavily.

Obviously, Google would never pull the Bing move of copying another search engine’s results nor be reliant on one for core ranking calculations.

Yandex has anti-SEO upper bounds for some ranking factors

315 ranking factors have thresholds beyond which any computed value indicates to the system that that feature of the page is over-optimized. 39 of these ranking factors are part of the initially weighted factors that may keep a page from being included in the initial postings list. You can find these in the spreadsheet I’ve linked to above by filtering on the Rank Coefficient and Anti-SEO columns.

It’s not far-fetched conceptually to expect that all modern search engines set thresholds on certain factors that SEOs have historically abused such as anchor text, CTR, or keyword stuffing. For instance, Bing was said to treat abusive use of the meta keywords tag as a negative factor.
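Conceptually, an upper-bound check like this is simple. The sketch below is only an illustration: the feature names and threshold values are invented, and the codebase excerpts discussed here don't tell us what the system does once a feature is flagged.

```python
# Hypothetical features and upper bounds; not values from the leak.
ANTI_SEO_UPPER_BOUNDS = {
    "exact_match_anchor_ratio": 0.6,
    "keyword_density": 0.08,
}


def over_optimized_features(features: dict[str, float]) -> list[str]:
    """Names of features whose value exceeds its anti-SEO upper bound."""
    return sorted(
        name
        for name, value in features.items()
        if name in ANTI_SEO_UPPER_BOUNDS and value > ANTI_SEO_UPPER_BOUNDS[name]
    )


page = {"exact_match_anchor_ratio": 0.85, "keyword_density": 0.03}
flagged = over_optimized_features(page)
```

The practical implication is that more of a "good" signal is not always better: past a certain point the same measurement becomes evidence of manipulation.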

Yandex boosts “Vital Hosts”

Yandex has a series of boosting mechanisms throughout its codebase. These are artificial improvements to certain documents to ensure they score higher when being considered for ranking.

Below is a comment from the “boosting wizard” which suggests that smaller files benefit best from the boosting algorithm.

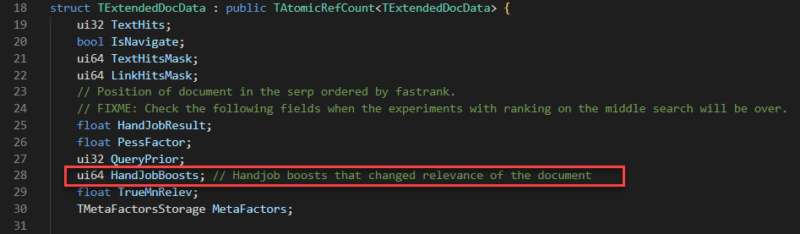

There are several types of boosts; I’ve seen one boost related to links and I’ve also seen a series of “HandJobBoosts” which I can only assume is a weird translation of “manual” changes.

One of these boosts that I found particularly interesting is related to “Vital Hosts,” where a vital host can be any site specified. Specifically mentioned in the variables is NEWS_AGENCY_RATING, which leads me to believe that Yandex gives a boost that biases its results to certain news organizations.

Without getting into geopolitics, this is very different from Google in that they have been adamant about not introducing biases like this into their ranking systems.

The structure of the document server

The codebase reveals how documents are stored in Yandex’s document server. This is helpful in understanding that a search engine does not simply make a copy of the page and save it to its cache; it captures various features as metadata to then use in the downstream rankings process.

The screenshot below highlights a subset of those features that are particularly interesting. Other files with SQL queries suggest that the document server has closer to 200 columns including the DOM tree, sentence lengths, fetch time, a series of dates, an antispam score, the redirect chain, and whether or not the document is translated. The most complete list I’ve come across is in /robot/rthub/yql/protos/web_page_item.proto.

What’s most interesting in the subset here is the number of simhashes that are employed. Simhashes are numeric representations of content and search engines use them for lightning fast comparison for the determination of duplicate content. There are various instances in the robot archive that indicate duplicate content is explicitly demoted.
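For anyone who hasn't worked with simhashes, here is a minimal sketch of the general technique. It is a textbook-style version that hashes whitespace tokens with MD5 and weights every token equally; I'm not claiming this is how Yandex computes its simhashes, and the function names are my own.

```python
import hashlib


def simhash(text: str, bits: int = 64) -> int:
    """Fold per-token hashes into one fingerprint of `bits` bits."""
    counts = [0] * bits
    for token in text.lower().split():
        digest = hashlib.md5(token.encode("utf-8")).digest()
        token_hash = int.from_bytes(digest[: bits // 8], "big")
        for i in range(bits):
            # Each token votes +1 or -1 on every bit position.
            counts[i] += 1 if (token_hash >> i) & 1 else -1
    return sum(1 << i for i, count in enumerate(counts) if count > 0)


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


original = "yandex source code leak reveals ranking factors"
fingerprint = simhash(original)
same_distance = hamming_distance(fingerprint, simhash(original))
```

Identical text always produces a distance of 0, and near-duplicate text typically produces a small distance, so duplicate detection reduces to comparing integers instead of comparing full documents.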

Also, as part of the indexing process, the codebase features TF-IDF, BM25, and BERT in its text processing pipeline. It’s not clear why all of these mechanisms exist in the code because there is some redundancy in using them all.
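As a reference point for the lexical side of that pipeline, here is a compact sketch of BM25 scoring over a toy corpus. It uses the commonly cited form of the formula with the usual default parameters (k1 = 1.5, b = 0.75); the corpus, tokenizer, and function name are assumptions, and nothing here is lifted from Yandex's implementation.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75):
    """Score every document against the query with BM25."""
    if not docs:
        return []
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in term_freq:
                continue
            idf = math.log(
                (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1
            )
            length_norm = 1 - b + b * len(tokens) / avg_len
            tf = term_freq[term]
            score += idf * tf * (k1 + 1) / (tf + k1 * length_norm)
        scores.append(score)
    return scores


corpus = [
    "yandex source code leak",
    "google search ranking signals",
    "source code for a search engine",
]
scores = bm25_scores("yandex leak", corpus)
```

BM25 is essentially TF-IDF with term-frequency saturation and document-length normalization, which is part of why having both in the same pipeline looks redundant.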

Link factors and prioritization

How Yandex handles link factors is particularly interesting because they previously disabled their impact altogether. The codebase also reveals a lot of information about link factors and how links are prioritized.

Yandex’s link spam calculator has 89 factors that it looks at. Anything marked as SF_RESERVED is deprecated. Where provided, you can find the descriptions of these factors in the Google Sheet linked above.

Notably, Yandex has a host rank and some scores that appear to live on long term after a site or page develops a reputation for spam.

Another thing Yandex does is review copy across a domain and determine if there is duplicate content with those links. This can be sitewide link placements, links on duplicate pages, or simply links with the same anchor text coming from the same site.

This illustrates how trivial it is to discount multiple links from the same source and clarifies how important it is to target more unique links from more diverse sources.
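To show just how trivial, here is a sketch that collapses links sharing the same source host and anchor text into a single "vote." The grouping key and the function name are my assumptions; how Yandex actually discounts such links is not something I'm asserting here.

```python
from urllib.parse import urlparse


def count_unique_link_votes(links: list[tuple[str, str]]) -> int:
    """Count links once per (source host, normalized anchor text) pair."""
    seen = set()
    for source_url, anchor_text in links:
        host = urlparse(source_url).netloc.lower()
        seen.add((host, anchor_text.strip().lower()))
    return len(seen)


# Hypothetical inbound links: (linking page URL, anchor text)
inbound_links = [
    ("https://a.example/page1", "Best Widgets"),
    ("https://a.example/page2", "best widgets"),  # sitewide repeat
    ("https://b.example/", "Acme"),
]
unique_votes = count_unique_link_votes(inbound_links)
```

Three raw links shrink to two votes here, and a sitewide footer link repeated across thousands of pages would shrink to one.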

What can we apply from Yandex to what we know about Google?

Naturally, this is still the question on everyone’s mind. While there are certainly many analogs between Yandex and Google, truthfully, only a Google Software Engineer working on Search could definitively answer that question.

Yet, that is the wrong question.

Really, this code should help us expand our thinking about modern search. Much of the collective understanding of search is built from what the SEO community learned in the early 2000s through testing and from the mouths of search engineers when search was far less opaque. That unfortunately has not kept up with the rapid pace of innovation.

Insights from the many features and factors of the Yandex leak should yield more hypotheses of things to test and consider for ranking in Google. They should also introduce more things that can be parsed and measured by SEO crawling, link analysis, and ranking tools.

For instance, a measure of the cosine similarity between queries and documents using BERT embeddings could be valuable to understand versus competitor pages since it’s something that modern search engines are themselves doing.
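The comparison itself is straightforward once you have the vectors. The sketch below uses tiny made-up vectors in place of real BERT embeddings, which would have hundreds of dimensions and come from a model; only the cosine similarity calculation is the point.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy stand-ins for embeddings of a query and two pages.
query_vec = [1.0, 0.0, 1.0]
our_page_vec = [0.9, 0.1, 0.8]
competitor_vec = [0.1, 0.9, 0.2]

our_similarity = cosine_similarity(query_vec, our_page_vec)
competitor_similarity = cosine_similarity(query_vec, competitor_vec)
```

Tracking that number for your page against the pages that outrank you gives a relevance measure that is closer to what the engines compute than keyword counts are.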

Much in the way the AOL Search logs moved us away from guessing the distribution of clicks on the SERP, the Yandex codebase moves us away from the abstract to the concrete, and our “it depends” statements can be better qualified.

To that end, this codebase is a gift that will keep on giving. It’s only been a weekend and we’ve already gleaned some very compelling insights from this code.

I anticipate some ambitious SEO engineers with far more time on their hands will keep digging and maybe even fill in enough of what’s missing to compile this thing and get it working. I also believe engineers at the different search engines are going through and parsing out innovations that they can learn from and add to their systems.

Simultaneously, Google lawyers are probably drafting aggressive cease and desist letters related to all the scraping.

I’m eager to see the evolution of our space that’s driven by the curious people who will maximize this opportunity.

But, hey, if getting insights from actual code is not valuable to you, you’re welcome to go back to doing something more important like arguing about subdomains versus subdirectories.

The post Yandex scrapes Google and other SEO learnings from the source code leak appeared first on Search Engine Land.
